DAA Interview Questions and Answers – DAA (Design and Analysis of Algorithms) is a critical aspect of computer science and programming that deals with creating efficient and effective algorithms for solving complex problems. The process involves designing an algorithm, analyzing its efficiency, and optimizing it for better performance. The study of DAA is crucial for anyone seeking a career in software development or computer science, as it helps in understanding the fundamental principles of problem-solving and algorithmic design. So candidates, who are appearing for the interview can have a look at these Top 100 DAA Interview Questions and prepare well for the interview.

DAA Interview Questions and Answers

In this article, we will explore the DAA Interview Questions that are frequently asked in the interview. So, let’s delve into the world of DAA and equip ourselves with the necessary knowledge and skills to tackle complex programming challenges and get succeed in the interview.

Top 100 DAA Interview Questions and Answers

1. What is an algorithm?

Answer: An algorithm is a step-by-step procedure for solving a problem or accomplishing a task.

2. What are the characteristics of a good algorithm?

Answer: A good algorithm should be correct, efficient, and easy to understand.

3. What is the difference between an algorithm and a program?

Answer: An algorithm is a step-by-step procedure for solving a problem, while a program is an implementation of an algorithm in a particular programming language.

4. What is the time complexity of an algorithm?

Answer: The time complexity of an algorithm is a measure of the amount of time it takes to run as a function of the size of the input data.

5. What is the space complexity of an algorithm?

Answer: The space complexity of an algorithm is a measure of the amount of memory it requires as a function of the size of the input data.

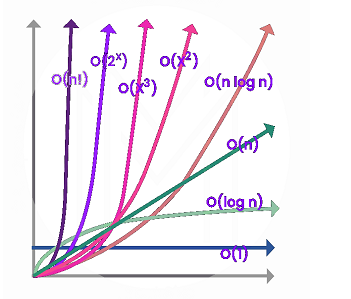

6. What is the Big O notation?

Answer: The Big O notation is used to describe the upper bound on the time complexity of an algorithm.

7. What is the worst-case time complexity of an algorithm?

Answer: The worst-case time complexity of an algorithm is the maximum amount of time it takes to run

over all possible inputs of a given size.

8. What is the best-case time complexity of an algorithm?

Answer: The best-case time complexity of an algorithm is the minimum amount of time it takes to run over all possible inputs of a given size.

9. What is the average-case time complexity of an algorithm?

Answer: The average-case time complexity of an algorithm is the expected amount of time it takes to run over all possible inputs of a given size.

10. What is the difference between the best-case and worst-case time complexity of an algorithm?

Answer: The best-case time complexity is the minimum amount of time an algorithm can take to run, while the worst-case time complexity is the maximum amount of time an algorithm can take to run.

11. What is the difference between the average-case and worst-case time complexity of an algorithm?

Answer: The worst-case time complexity is the maximum amount of time an algorithm can take to run, while the average-case time complexity is the expected amount of time an algorithm will take to run.

12. What is the difference between time complexity and space complexity?

Answer: Time complexity measures the amount of time an algorithm takes to run, while space complexity measures the amount of memory an algorithm requires.

13. What is the difference between an array and a linked list?

Answer: An array is a contiguous block of memory used to store a collection of data items, while a linked list is a data structure in which each data item is stored in a separate node that contains a reference to the next node.

14. What is a binary search?

Answer: A binary search is an algorithm that searches for a particular value in a sorted array by repeatedly dividing the search interval in half.

15. What is a sorting algorithm?

Answer: A sorting algorithm is an algorithm that puts a collection of data items into a specific order, such as alphabetical or numerical order.

16. What is the difference between a stable and an unstable sorting algorithm?

Answer: A stable sorting algorithm preserves the relative order of equal elements in the sorted output, while an unstable sorting algorithm does not guarantee the relative order of equal elements.

17. What is the difference between top-down and bottom-up dynamic programming approaches?

Answer: The top-down approach starts from the main problem and recursively breaks it down into subproblems, while the bottom-up approach starts from subproblems and builds up to solve the main problem.

18. What is memoization in dynamic programming?

Answer: Memoization is a technique of storing the results of solved subproblems in a table to avoid their repeated calculation in future recursive calls.

19. What is the difference between dynamic programming and divide-and-conquer algorithms?

Answer: Dynamic programming involves solving subproblems and reusing their solutions to solve the main problem, while divide-and-conquer algorithms divide the problem into independent subproblems and solve them separately.

20. What is the time complexity of the brute-force approach?

Answer: The time complexity of the brute-force approach is typically O(n^n) or O(2^n), where n is the size of the input.

21. What is the difference between stable and unstable sorting algorithms?

Answer: Stable sorting algorithms preserve the relative order of equal elements in the input, while unstable sorting algorithms may not.

22. What is the time complexity of the quicksort algorithm?

Answer: The average-case time complexity of the quicksort algorithm is O(n*logn), where n is the size of the input.

23. What is the difference between in-place and out-of-place sorting algorithms?

Answer: In-place sorting algorithms sort the input array in place without using additional memory, while out-of-place sorting algorithms require additional memory to store the sorted output.

24. What is the time complexity of the mergesort algorithm?

Answer: The time complexity of the mergesort algorithm is O(n*logn), where n is the size of the input.

25. What is the difference between breadth-first search and depth-first search algorithms?

Answer: Breadth-first search explores the nodes in the graph in a breadth-first order, while depth-first search explores the nodes in a depth-first order.

26. What is the difference between a graph and a tree data structure?

Answer: A tree is a special case of a graph, where there are no cycles, and every pair of nodes is connected by a unique path.

27. What is the time complexity of the Dijkstra’s algorithm?

Answer: The time complexity of Dijkstra’s algorithm is O(E*logV), where E is the number of edges and V is the number of vertices in the graph.

28. What is the difference between a directed graph and an undirected graph?

Answer: A directed graph has directed edges, where each edge points from one vertex to another, while an undirected graph has undirected edges, where each edge connects two vertices without any direction.

29. What is the difference between a complete graph and a sparse graph?

Answer: A complete graph has all possible edges between every pair of vertices, while a sparse graph has relatively fewer edges.

30. What is the time complexity of the Bellman-Ford algorithm?

Answer: The time complexity of the Bellman-Ford algorithm is O(VE), where V is the number of vertices and E is the number of edges in the graph.

31. What is the difference between a greedy algorithm and a dynamic programming algorithm?

Answer: A greedy algorithm makes locally optimal choices at each step, while a dynamic programming algorithm solves subproblems and reuses their solutions to solve the main problem.

32. What is a divide-and-conquer algorithm?

Answer: A divide-and-conquer algorithm is an algorithm that recursively divides a problem into subproblems of smaller size, solves the subproblems, and combines the solutions to solve the original problem.

33. What is a greedy algorithm?

Answer: A greedy algorithm is an algorithm that makes locally optimal choices at each step, hoping to find a globally optimal solution.

34. What is dynamic programming?

Answer: Dynamic programming is an algorithmic technique that solves problems by breaking them down into smaller subproblems and storing the solutions to these subproblems to avoid redundant calculations.

35. What is backtracking?

Answer: Backtracking is an algorithmic technique that involves exploring all possible solutions to a problem by systematically trying different choices, and undoing choices that lead to dead ends.

36. What is memoization?

Answer: Memoization is an optimization technique that stores the results of expensive function calls and returns the cached result when the same inputs occur again.

37. What is recursion?

Answer: Recursion is a programming technique in which a function calls itself to solve a problem.

38. What is the difference between recursion and iteration?

Answer: Recursion involves calling a function from within itself to solve a problem, while iteration involves using loops to repeat a block of code until a condition is met.

39. What is the difference between top-down and bottom-up dynamic programming?

Answer: Top-down dynamic programming involves solving a problem by breaking it down into subproblems, while bottom-up dynamic programming involves solving the subproblems first and combining them to solve the original problem.

40. What is the Knapsack problem?

Answer: The Knapsack problem is a classic optimization problem in which a set of items with different weights and values must be packed into a knapsack of a given capacity, while maximizing the total value of the items.

41. What is the traveling salesman problem?

Answer: The traveling salesman problem is a classic optimization problem in which a salesman must visit a set of cities, each only once, and return to his starting point, while minimizing the total distance traveled.

42. What is the complexity of the brute-force solution to the traveling salesman problem?

Answer: The brute-force solution to the traveling salesman problem has a time complexity of O(n!), where n is the number of cities.

43. What is the greedy approach to the traveling salesman problem?

Answer: The greedy approach to the traveling salesman problem involves starting at a random city, and at each step, choosing the closest unvisited city as the next destination.

44. What is the complexity of the greedy approach to the traveling salesman problem?

Answer: The greedy approach to the traveling salesman problem has a time complexity of O(n^2), where n is the number of cities.

45. What is a heuristic?

Answer: A heuristic is a technique that is used to find a good solution to a problem quickly, but does not guarantee an optimal solution.

46. What is the A* algorithm?

Answer: The A* algorithm is a heuristic search algorithm that uses both the cost of the path already taken and an estimate of the cost of the remaining path to guide the search.

47. What is the difference between breadth-first search and depth-first search?

Answer: Breadth-first search explores all nodes at the same level before moving on to the next level, while depth-first search explores as far as possible along each branch before backtracking.

48. What is the difference between Dijkstra’s algorithm and A* algorithm?

Answer: Dijkstra’s algorithm is a shortest path algorithm that uses only the cost of the path already taken, while the A* algorithm uses both the cost of the path already taken and an estimate of the cost of the remaining path.

49. What is a hash table?

Answer: A hash table is a data structure that maps keys to values by using a hash function to compute an index into an array of buckets.

50. What is collision resolution in hash tables?

Answer: Collision resolution is the process of dealing with two or more keys that hash to the same index in a hash table. There are several techniques for collision resolution, such as chaining and open addressing.

51. What is the time complexity of a hash table operation?

Answer: The time complexity of a hash table operation depends on the specific operation and the implementation, but is typically O(1) on average.

52. What is a priority queue?

Answer: A priority queue is a data structure that stores a collection of elements and allows access to the element with the highest priority according to a specified priority function.

53. What is a heap?

Answer: A heap is a data structure that maintains a partially ordered tree in which each node is greater than or equal to its children (for a max heap) or less than or equal to its children (for a min heap).

54. What is the time complexity of a heap operation?

Answer: The time complexity of a heap operation depends on the specific operation and the implementation, but is typically O(log n) for insertion and deletion, and O(1) for accessing the maximum or minimum element.

55. What is a graph?

Answer: A graph is a data structure that consists of a set of vertices (nodes) and a set of edges that connect pairs of vertices.

56. What is a directed graph?

Answer: A directed graph is a graph in which each edge has a direction, indicating a one-way connection between two vertices.

57. What is an undirected graph?

Answer: An undirected graph is a graph in which each edge has no direction, indicating a bidirectional connection between two vertices.

58. What is a weighted graph?

Answer: A weighted graph is a graph in which each edge has a weight or cost assigned to it, indicating the cost or distance between the two vertices it connects.

59. What is a cycle in a graph?

Answer: A cycle in a graph is a path that starts and ends at the same vertex, and includes at least one edge.

60. What is a connected graph?

Answer: A connected graph is a graph in which there is a path between every pair of vertices.

61. What is a spanning tree?

Answer: A spanning tree of a graph is a subgraph that includes all the vertices of the graph and forms a tree (a connected acyclic graph).

62. What is the minimum spanning tree of a graph?

Answer: The minimum spanning tree of a graph is the spanning tree with the minimum sum of the weights of its edges.

63. What is Kruskal’s algorithm?

Answer: Kruskal’s algorithm is a greedy algorithm that finds the minimum spanning tree of a graph by repeatedly adding the next lightest edge that does not form a cycle.

64. What is Prim’s algorithm?

Answer: Prim’s algorithm is a greedy algorithm that finds the minimum spanning tree of a graph by starting at a random vertex and repeatedly adding the next lightest edge that connects a vertex in the tree to a vertex outside the tree.

65. What is the time complexity of Kruskal’s algorithm and Prim’s algorithm?

Answer: The time complexity of Kruskal’s algorithm and Prim’s algorithm is O(E log E), where E is the number of edges in the graph.

66. What is a topological sort?

Answer: A topological sort of a directed acyclic graph is a linear ordering of its vertices such that for every directed edge from vertex u to vertex v, u comes before v in the ordering.

67. What is the time complexity of a topological sort?

Answer: The time complexity of a topological sort is O(V+E), where V is the number of vertices and E is the number of edges in the graph.

68. What is the difference between breadth-first search and topological sort?

Answer: Breadth-first search is a graph traversal algorithm that visits all the vertices in the graph at a given distance (level) from a starting vertex before moving on to vertices at a greater distance. Topological sort, on the other hand, is a way of ordering the vertices of a directed acyclic graph such that for every directed edge u->v, u comes before v in the ordering. While both algorithms involve visiting the vertices of a graph, their purposes and methods are quite different.

69. What is Dijkstra’s algorithm?

Answer: Dijkstra’s algorithm is a greedy algorithm that finds the shortest path between a starting vertex and all other vertices in a graph with non-negative edge weights. It works by maintaining a set of vertices for which the shortest path is known, and repeatedly selecting the vertex with the shortest path and updating the shortest paths to its neighbors.

70. What is the time complexity of Dijkstra’s algorithm?

Answer: The time complexity of Dijkstra’s algorithm is O((V+E)log V) using a binary heap, where V is the number of vertices and E is the number of edges in the graph.

71. What is the Bellman-Ford algorithm?

Answer: The Bellman-Ford algorithm is a dynamic programming algorithm that finds the shortest path between a starting vertex and all other vertices in a graph with possibly negative edge weights. It works by repeatedly relaxing the edges in the graph, i.e., updating the shortest path estimate for each vertex based on the shortest path estimate of its neighbors.

72. What is the Floyd-Warshall algorithm?

Answer: The Floyd-Warshall algorithm is a dynamic programming algorithm that finds the shortest path between all pairs of vertices in a graph with possibly negative edge weights. It works by maintaining a table of shortest path estimates for all pairs of vertices, and repeatedly updating these estimates based on intermediate vertices.

73. What is the time complexity of the Floyd-Warshall algorithm?

Answer: The time complexity of the Floyd-Warshall algorithm is O(V^3), where V is the number of vertices in the graph.

74. What is the difference between dynamic programming and greedy algorithms?

Answer: Both dynamic programming and greedy algorithms are techniques for solving optimization problems, but they differ in their approach. Dynamic programming involves breaking a problem down into smaller subproblems and solving each subproblem only once, storing the solution in a table to avoid recomputation. Greedy algorithms, on the other hand, make the locally optimal choice at each step, without considering the global optimal solution. Dynamic programming is generally more computationally expensive than greedy algorithms, but can handle more complex optimization problems.

75. What is the principle of optimality?

Answer: The principle of optimality is a key concept in dynamic programming that states that an optimal solution to a problem can be constructed from optimal solutions to its subproblems.

76. What is a heuristic algorithm?

Answer: A heuristic algorithm is an algorithm that uses an approximate, “rule of thumb” approach to find a solution to a problem, rather than an exact method. Heuristic algorithms are often used when exact solutions are infeasible or too expensive to compute.

77. What is simulated annealing?

Answer: Simulated annealing is a probabilistic metaheuristic algorithm for finding global optima in a large search space. It is inspired by the process of annealing in metallurgy, where a metal is slowly cooled to increase its strength and reduce defects. Simulated annealing works by accepting moves that decrease the objective function with a probability that depends on the current “temperature” of the system, which is gradually decreased over time.

78. What is genetic algorithm?

Answer: Genetic algorithm is a metaheuristic algorithm inspired by the process of natural selection in biological evolution. It involves maintaining a population of potential solutions to a problem, and using a process of selection, recombination, and mutation to create new solutions and evolve the population towards better solutions.

79. What is quicksort algorithm?

Answer: Quicksort is a popular sorting algorithm that uses a divide-and-conquer strategy to sort an array of elements. It works by selecting a pivot element from the array, partitioning the array into two subarrays based on the pivot, and recursively sorting the subarrays. Quicksort has an average case time complexity of O(n log n), making it one of the fastest sorting algorithms in practice.

80. What is merge sort algorithm?

Answer: Merge sort is a popular sorting algorithm that uses a divide-and-conquer strategy to sort an array of elements. It works by dividing the array into two halves, recursively sorting each half, and then merging the two sorted halves together. Merge sort has a worst-case time complexity of O(n log n), making it a good choice for sorting large datasets.

81. What is the difference between quicksort and mergesort?

Answer: Quicksort and mergesort are both popular sorting algorithms that use the divide-and-conquer strategy, but they differ in their approach. Quicksort uses a pivot element to partition the array and sort the subarrays recursively, while mergesort divides the array into two halves and sorts them recursively before merging the sorted halves together. In general, quicksort is faster than mergesort in practice, but mergesort has a more predictable worst-case time complexity.

82. What is dynamic programming used for?

Answer: Dynamic programming is a technique used to solve optimization problems that can be broken down into smaller subproblems. It is often used in problems involving sequence alignment, shortest path finding, knapsack problems, and other optimization problems.

83. What is the difference between a breadth-first search and a depth-first search?

Answer: Breadth-first search and depth-first search are two popular graph traversal algorithms that differ in their approach. Breadth-first search visits all the vertices at a given distance from the starting vertex before moving on to vertices at a greater distance, while depth-first search explores each branch of the graph as far as possible before backtracking. Breadth-first search is guaranteed to find the shortest path in an unweighted graph, while depth-first search can be used to search for a target vertex in a large graph.

84. What is the time complexity of binary search?

Answer: The time complexity of binary search is O(log n), where n is the size of the array being searched. Binary search works by repeatedly dividing the search interval in half, so the number of comparisons needed to find a target element is proportional to the logarithm of the size of the array.

85. What is the time complexity of bubble sort?

Answer: The time complexity of bubble sort is O(n^2), where n is the size of the array being sorted. Bubble sort works by repeatedly swapping adjacent elements that are out of order, so it needs to make O(n^2) comparisons and swaps in the worst case.

86. What is the time complexity of insertion sort?

Answer: The time complexity of insertion sort is O(n^2), where n is the size of the array being sorted. Insertion sort works by iteratively inserting each element in the proper position in a sorted subarray, so it needs to make O(n^2) comparisons and swaps in the worst case.

87. What is the time complexity of selection sort?

Answer: The time complexity of selection sort is O(n^2), where n is the size of the array being sorted. Selection sort works by repeatedly finding the smallest element in the unsorted portion of the array and swapping it with the first unsorted element, so it needs to make O(n^2) comparisons and swaps in the worst case.

88. What is the time complexity of a linear search?

Answer: The time complexity of a linear search is O(n), where n is the size of the array being searched. Linear search works by iterating through each element in the array until it finds the target element, so it needs to make O(n) comparisons in the worst case.]

89. What is the time complexity of a hash table lookup?

Answer: The time complexity of a hash table lookup is O(1) on average, but can be O(n) in the worst case, where n is the number of keys in the table. Hash table lookup works by computing the hash value of a key, which maps it to an index in an array, and then checking if the key is present at that index. In the average case, there is only one key at each index, so the lookup takes constant time. However, in the worst case, all the keys map to the same index, resulting in a linear search through a linked list at that index, which takes O(n) time.

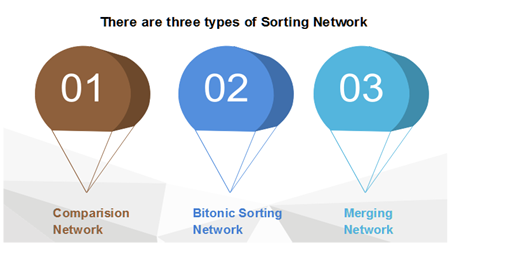

90. What is Sorting Network?

Answer: A sorting network is a numerical representation of a network comprising wires and comparator modules, which is utilized for sorting a sequence of numbers. The comparator modules connect two wires and sort the values by directing the smaller value to one wire and the higher value to the other. The key distinction between sorting networks and comparison sorting algorithms is that the sequence of comparisons is predetermined in a sorting network, irrespective of the outcomes of prior comparisons. This decoupling of comparison series is beneficial for parallel implementation of the algorithms.

91. What is the difference between the Dynamic programming and Greedy method?

| Characteristic | Dynamic Programming | Greedy Method |

| Approach | Bottom-up approach (start from subproblems and build up to solve the main problem) | Top-down approach (start from the main problem and make locally optimal choices) |

| Solution quality | Optimal solution guaranteed | Optimal solution not always guaranteed |

| Subproblem reuse | Subproblems are solved only once and their solutions are stored in a table | Subproblems are not revisited, and the locally optimal choice is made at each step |

| Solution space | Considers all possible solutions | Considers only the locally optimal solution |

| Time complexity | Can have a higher time complexity than Greedy Method | Can have a lower time complexity than Dynamic Programming |

| Suitable problems | Suitable for problems that exhibit optimal substructure and overlapping subproblems | Suitable for problems where making locally optimal choices leads to a global optimal solution |

| Examples | Fibonacci sequence, Knapsack problem | Coin changing problem, Huffman coding |

92. List the advantage of Huffman’s encoding?

Answer: Huffman’s encoding is a crucial file compression technique that provides the following benefits:

- Easy to use

- Flexible

- Implements optimal and minimum length encoding

93. What is the Algorithm’s Time Complexity?

Answer: The time complexity of an algorithm refers to the total amount of time required for the program to run until it finishes.

It is typically expressed using the big O notation.

The algorithm’s time complexity indicates the length of time needed for the program to run entirely.

94. What is a Greedy method in DAA?

Answer: Greedy algorithms solve optimization problems by constructing a solution piece by piece. At each step, they select the next component that provides an immediate benefit without taking prior decisions into account. This method is primarily employed for addressing optimization problems.

95. Can you explain Asymptotic Notation?

Answer: Asymptotic Notation is a mathematical technique used to analyze and describe the behavior of functions as their input size approaches infinity. This notation involves methods whose domains are the set of natural numbers and is useful for defining the worst-case running time function T(n). It can also be extended to the domain of the real numbers.

96. What is the difference between Time Efficiency and Space Efficiency?

Time Efficiency refers to the measure of the number of times the critical algorithm functions are executed, while Space Efficiency calculates the number of additional memory units utilized by the algorithm.

97. Can you provide an overview of how Merge sort works, and can you give an example of its implementation?

Answer: Merge sort is a sorting algorithm that involves dividing the original list into two smaller sub-lists until only one item is left in each sub-list. These sub-lists are then sorted, and the sorted sub-lists are merged to form a sorted parent list. This process is repeated recursively until the original list is completely sorted.

For example, suppose we have an unsorted list of numbers: [5, 2, 8, 4, 7, 1, 3, 6]. The Merge sort algorithm will first divide the list into two sub-lists: [5, 2, 8, 4] and [7, 1, 3, 6]. Each sub-list will then be recursively divided until only one item is left in each sub-list: [5], [2], [8], [4], [7], [1], [3], [6]. These single-item sub-lists are then sorted and merged pairwise to form new sub-lists: [2, 5], [4, 8], [1, 7], [3, 6]. The process continues recursively until the final sorted list is obtained: [1, 2, 3, 4, 5, 6, 7, 8].

98. Can you explain the concept of Huffman code?

Answer: Huffman code refers to a variable-length encoding technique that involves constructing an optimal prefix tree to assign bit strings to characters based on their frequency in a given text.

99. Can you describe dynamic Huffman coding?

Answer: Dynamic Huffman coding involves updating the coding tree every time a new character is read from the source text. It is an improved version of the simplest Huffman coding technique and is used to overcome its limitations.

100. Can you define the n-queen problem?

Answer: The n-queen problem involves placing n queens on an n-by-n chessboard in a way that none of the queens attack each other by being in the same row, column, or diagonal.

The study of DAA algorithms is essential for computer science professionals and software developers as it equips them with critical problem-solving and algorithmic design skills. By understanding the fundamental principles of DAA and familiarizing oneself DAA Interview Questions and Answers, one can confidently navigate complex programming challenges and excel in their career. And follow our Freshersnow website frequently to know more interview questions to get succeed in an technical interview easily.