OS Interview Questions and Answers: In order to assist you in preparing for your upcoming interview, we have provided a list of the Top 100 OS Interview Questions and Answers, which includes the most recent OS interview questions.

Top 100 OS Interview Questions and Answers 2025

1. What is an operating system and why is it important?

An operating system is a software program that manages computer hardware and software resources and provides common services for computer programs. It is important because it acts as an interface between the user and the computer hardware, and manages the resources of the computer efficiently.

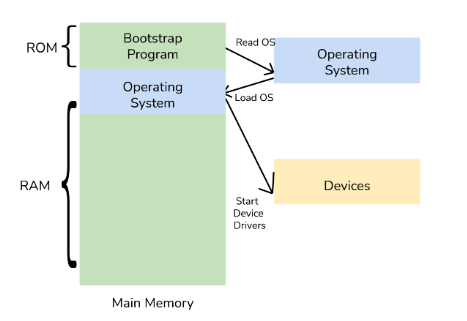

2. What is a bootstrap program in OS?

The bootstrap program is the initial program that runs when a computer is powered on or restarted; the procedure it carries out is known as booting or bootstrapping. It initializes the system and loads the operating system, so the OS cannot start without it. The bootstrap program is stored in the boot blocks at a fixed location on the disk. Its primary function is to locate the kernel and load it into main memory, after which the kernel begins executing.

3. What is the difference between a process and a thread?

| Aspect | Process | Thread |
|---|---|---|
| Definition | An instance of a running program that consists of one or more threads of execution, an address space, and system resources such as open files and network connections. | A lightweight unit of execution within a process that shares the same memory space and system resources as other threads in the process. |
| Creation | Created by the operating system when a program is launched, or by another process using system calls or APIs. | Created by the process itself using threading libraries or APIs, or by the operating system as part of process creation. |
| Resource Usage | Each process has its own separate memory space, system resources, and scheduling context, which helps ensure stability and security but incurs overhead. | Threads within a process share the same memory space and system resources, while each thread keeps its own stack, registers, and scheduling state; this sharing makes communication between threads efficient. |

4. Can you explain the concept of virtual memory?

Virtual memory is a technique used by an operating system to allow a computer to use more memory than it physically has available. It creates a virtual address space that can be larger than the physical memory, allowing programs to operate as if there is more memory available.

5. What is a system call, and what is its syntax in Linux?

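A system call is a mechanism for a program to request a service from the operating system kernel. Inside the Linux kernel, each system call is implemented as a function that returns a long, and user programs normally reach it through a C library wrapper. The general form is: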

long syscall_name(arguments);

6. What is a system call and how does it work?

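A system call is a request made by a program to the operating system for a service, such as input/output or memory allocation. It works by executing a trap: a software interrupt or a dedicated instruction (such as syscall on x86-64) that switches the CPU to kernel mode and transfers control to the operating system kernel. The kernel performs the requested service and then returns the result to the program.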

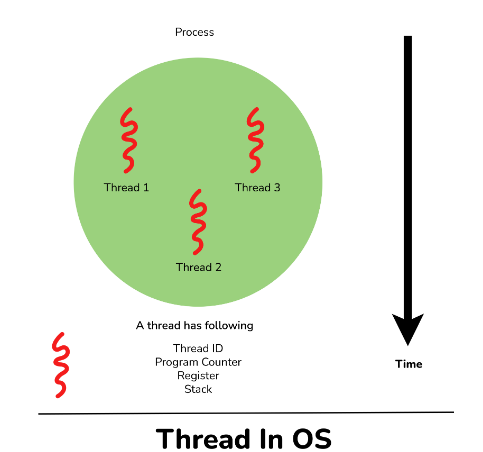

7. What is a thread in OS?

A thread is a path of execution within a process, consisting of a program counter, a thread ID, a stack, and a set of registers. It is the basic unit of CPU utilization. Threads make communication within a process cheaper, allow an application to exploit multiprocessor architectures, and reduce the cost of context switching, so they offer a way to improve application performance through parallelism. Threads are sometimes referred to as lightweight processes since each has its own stack but can access shared data.

In a process, multiple threads that are running share the following: Address space, Heap, Static data, Code segments, File descriptors, Global variables, Child processes, Pending alarms, Signals, and signal handlers.

Each thread has its own unique: Program counter, Registers, Stack, and State.

8. What are the different types of scheduling algorithms used by an operating system?

The different types of scheduling algorithms used by an operating system include First Come First Serve (FCFS), Shortest Job First (SJF), Priority Scheduling, Round Robin, and Multi-level Queue Scheduling.
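To make the difference between two of these algorithms concrete, here is a minimal sketch that compares average waiting time under FCFS and non-preemptive SJF. The burst times are hypothetical, and the sketch assumes every job arrives at time 0.

```python
def average_waiting_time(burst_times):
    """Average waiting time when jobs run back to back in the given order."""
    waited = 0
    elapsed = 0
    for burst in burst_times:
        waited += elapsed   # this job waited for every job that ran before it
        elapsed += burst
    return waited / len(burst_times)


bursts = [24, 3, 3]                          # hypothetical CPU bursts
fcfs = average_waiting_time(bursts)          # run in arrival order
sjf = average_waiting_time(sorted(bursts))   # run shortest job first
print(fcfs, sjf)  # 17.0 3.0
```

Running the short jobs first lowers the average wait here because the long job no longer delays everything behind it.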

9. What is a deadlock and how can it be avoided?

A deadlock is a situation where two or more processes are unable to proceed because each is waiting for another to release a resource. It can be avoided with techniques such as analyzing resource allocation graphs, using timeouts on resource requests, and preventing circular wait, for example by always acquiring resources in a fixed order.
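One common way to rule out circular wait is to make every thread acquire locks in the same global order. The sketch below is an illustration only; the helper names and the choice of ordering locks by `id()` are assumptions made for the example.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()


def in_global_order(*locks):
    """Sort locks by id() so every thread acquires them in one fixed order."""
    return sorted(locks, key=id)


def use_both(first, second, log, name):
    # The caller may pass the locks in any order; we always take them
    # in the global order, so no cycle of waiting threads can form.
    ordered = in_global_order(first, second)
    with ordered[0]:
        with ordered[1]:
            log.append(name)


log = []
t1 = threading.Thread(target=use_both, args=(lock_a, lock_b, log, "t1"))
t2 = threading.Thread(target=use_both, args=(lock_b, lock_a, log, "t2"))
t1.start()
t2.start()
t1.join()
t2.join()
print(sorted(log))  # ['t1', 't2']
```

Without the ordering step, `t1` could hold `lock_a` while `t2` holds `lock_b`, and each would wait for the other forever.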

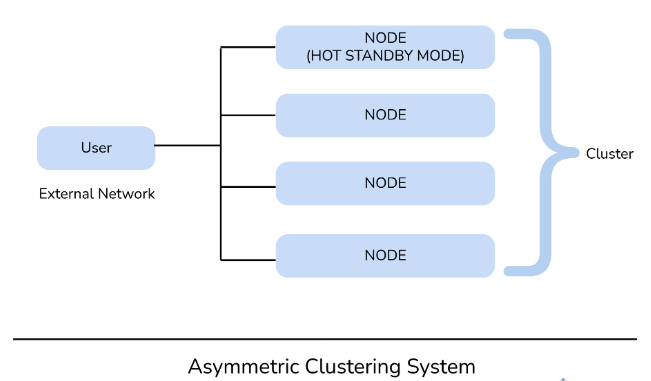

10. What do you mean by asymmetric clustering?

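Asymmetric clustering refers to a system in which one node is in hot standby mode while the remaining nodes run various applications. The standby node does nothing but monitor the active nodes and takes over if one of them fails. Because the standby hardware sits idle until a failure occurs, this type of clustering does not use all of the available hardware, but it is generally considered highly reliable.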

11. Can you explain the concept of paging and segmentation?

Paging and segmentation are memory management techniques used by an operating system. Paging divides memory into fixed-size pages, while segmentation divides memory into variable-size segments. Both techniques allow for the efficient use of memory.

12. How does an operating system manage I/O devices?

An operating system manages I/O devices by providing device drivers that communicate with the hardware and provide a standard interface for applications to use. It also manages I/O operations by using interrupt-driven I/O, DMA (Direct Memory Access), and buffering.

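13. What is the difference between a kernel and a shell?

The kernel is the core of the operating system: it runs in privileged mode and manages the CPU, memory, and devices. A shell is a user-level program, such as a command-line interpreter, that accepts commands from the user and asks the kernel to carry them out through system calls.

How do you declare a pointer to a structure in C programming language?

To declare a pointer to a structure in C, use the following syntax: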

struct structure_name *pointer_name;

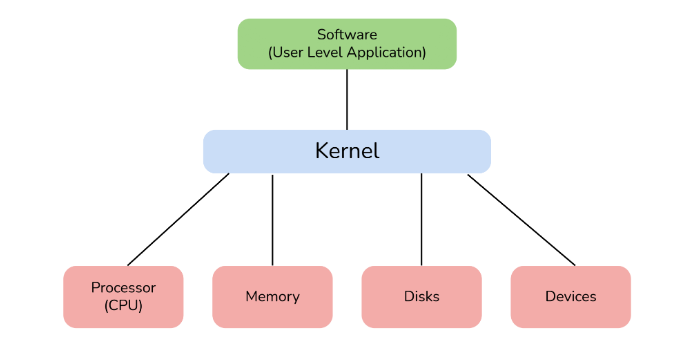

14. What is Kernel and write its main functions?

The kernel is a crucial computer program that serves as the core component or module of an operating system. Its primary function is to manage and control all the operations of the computer system and hardware. It is loaded into the main memory when the system starts and acts as an intermediary between user applications and the computer’s hardware.

Functions of Kernel:

- It is responsible for managing all computer resources such as CPU, memory, files, processes, etc.

- It facilitates or initiates the interaction between components of hardware and software.

- It manages RAM so that all running processes and programs can work effectively and efficiently.

15. Can you explain the concept of mutual exclusion?

Mutual exclusion is a technique used by an operating system to ensure that only one process can access a resource at a time. It is achieved through the use of semaphores, locks, and other synchronization mechanisms.

16. What is a file system and how does it work?

A file system is a method used by an operating system to store and organize files on a storage device. It works by dividing the storage into blocks, assigning each block a unique address, and maintaining a directory structure to keep track of files and their locations.

17. How does an operating system handle interrupts?

An operating system handles interrupts by temporarily suspending the current program, saving its state, and executing an interrupt handler routine. The interrupt handler then processes the interrupt and returns control to the interrupted program.

18. Can you explain the concept of cache memory?

Cache memory is a small amount of high-speed memory, located on or close to the processor, that stores frequently accessed data and instructions. Copies of data from main memory are kept in the cache, allowing the processor to access them more quickly than it could from main memory.

19. What is a semaphore and how is it used in synchronization?

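A semaphore is a synchronization mechanism used in operating systems to control access to shared resources. It is an integer counter with two atomic operations: wait (P), which decrements the counter and blocks the caller if no unit is available, and signal (V), which increments the counter and wakes a waiting process or thread. Because it works by signaling, it also allows processes or threads to coordinate their actions.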
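As an illustration, the following sketch uses Python's `threading.Semaphore` to let at most two threads use a resource at once. The limit of two, the worker function, and the `stats` dictionary are assumptions made for the example.

```python
import threading
import time

MAX_CONCURRENT = 2                       # hypothetical number of resource units
slots = threading.Semaphore(MAX_CONCURRENT)
stats = {"active": 0, "peak": 0}
stats_lock = threading.Lock()


def worker():
    with slots:                          # wait: blocks while no unit is free
        with stats_lock:
            stats["active"] += 1
            stats["peak"] = max(stats["peak"], stats["active"])
        time.sleep(0.01)                 # simulate using the shared resource
        with stats_lock:
            stats["active"] -= 1
    # leaving the "with slots" block performs the signal operation


threads = [threading.Thread(target=worker) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(stats["peak"] <= MAX_CONCURRENT)  # True
```

Six threads are started, but the recorded peak never exceeds the semaphore's initial count.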

20. What are the different types of IPC mechanisms?

There are several IPC mechanisms used in operating systems, including shared memory, message passing, pipes, and sockets. Shared memory allows processes to share a portion of memory, while message passing involves sending and receiving messages between processes. Pipes carry a stream of bytes between processes on the same machine, and sockets allow communication between processes on the same machine or over a network.
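A pipe can be sketched with Python's `subprocess` module, which connects a parent process to a child through the child's standard input and output. The function name and the child program below are hypothetical and chosen only for illustration.

```python
import subprocess
import sys


def upper_via_child(message):
    """Send text to a child process through a pipe and read its reply."""
    # The child reads everything from stdin and writes it back in upper case.
    child_code = "import sys; sys.stdout.write(sys.stdin.read().upper())"
    result = subprocess.run(
        [sys.executable, "-c", child_code],
        input=message,          # written to the child's stdin pipe
        capture_output=True,    # child's stdout is read back through a pipe
        text=True,
        check=True,
    )
    return result.stdout


print(upper_via_child("hello from the parent"))  # HELLO FROM THE PARENT
```

The two processes share no memory; all data moves through the pipes that the operating system sets up between them.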

21. What is the syntax for creating a new directory in Linux?

To create a new directory in Linux, use the following syntax:

mkdir directory_name

22. Can you explain the concept of a context switch?

A context switch is the process of saving the state of a running process and restoring the state of another process. It is an essential mechanism used by operating systems to allow multiple processes to run concurrently on a single processor.

23. What is a page fault and how is it handled by an operating system?

A page fault occurs when a program tries to access a page of memory that is not currently in physical memory. The operating system handles the page fault by fetching the required page from the disk into physical memory and updating the page table.

24. How does an operating system handle memory allocation and deallocation?

The operating system manages memory allocation and deallocation through a process known as memory management. It allocates memory to processes when they are created and frees up memory when processes are terminated. The operating system also uses techniques such as paging and segmentation to optimize memory usage.

25. Can you explain the concept of race conditions and how they can be avoided?

Race conditions occur when multiple processes or threads access a shared resource simultaneously, resulting in unexpected and incorrect behavior. They can be avoided by using synchronization mechanisms like semaphores, mutexes, and monitors to ensure that only one process or thread can access the shared resource at a time.
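The sketch below shows one way to protect a shared counter with a mutex so that concurrent increments are not lost. The `Counter` class and the thread counts are assumptions made for the example.

```python
import threading


class Counter:
    """A shared counter whose updates are serialized by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Read-modify-write happens inside the lock, so no update is lost.
        with self._lock:
            self.value += 1


def run(counter, n_threads=4, n_increments=10_000):
    def work():
        for _ in range(n_increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


total = run(Counter())
print(total)  # 40000
```

Without the lock, two threads could read the same old value and both write back the same result, which is the lost-update form of a race condition.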

26. What is the syntax for creating a symbolic link in Linux?

To create a symbolic link in Linux, give the existing file first and the name of the new link second:

ln -s target_file link_name

27. What is a process control block and how is it used by an operating system?

A process control block (PCB) is a data structure used by an operating system to store information about a process, such as its current state, priority, and memory allocation. It is used by the operating system to manage and schedule processes, as well as to perform context switching between them.

28. What is the difference between a monolithic kernel and a microkernel?

| Aspect | Monolithic Kernel | Microkernel |
|---|---|---|
| Definition | A kernel that provides all operating system services, including memory management, process scheduling, device drivers, and file system access, as a single, large executable binary. | A kernel that provides a minimal set of operating system services, such as interprocess communication and memory management, and delegates other services, such as device drivers and file systems, to user-level processes or servers. |
| Design | All services are tightly integrated into the kernel, which can provide high performance and low overhead but may also be more complex and less flexible. | Services are separated from the kernel, which can provide more flexibility and easier maintenance but may also introduce more overhead and reduce performance. |
| Size | Tends to be larger, as all services are included in the kernel binary. | Tends to be smaller, as many services are delegated to user-level processes or servers. |

29. How does an operating system manage user accounts and permissions?

An operating system manages user accounts and permissions by providing mechanisms for creating and managing user accounts, assigning privileges and permissions to users and groups, and controlling access to system resources. This is typically accomplished through the use of access control lists (ACLs) or permissions-based models.

30. Can you explain the concept of virtualization?

Virtualization is the process of creating a virtual version of a computing resource, such as a server, operating system, or network, using software. This allows multiple virtual instances of the resource to run simultaneously on a single physical machine, providing increased efficiency and flexibility.

31. How do you define a constant variable in C programming language?

To define a constant variable in C, use the following syntax:

const data_type variable_name = value;

32. What is a device driver and how does it work?

A device driver is a software component that allows an operating system to communicate with a hardware device, such as a printer, scanner, or sound card. It works by providing a standard interface between the device and the operating system, allowing the operating system to send commands to the device and receive data from it.

33. How does an operating system handle power management?

An operating system handles power management by providing mechanisms for managing power consumption, such as suspending or hibernating the system when it is not in use, reducing the CPU clock speed, and turning off peripheral devices when they are not in use.

34. Can you explain the concept of distributed systems?

A distributed system is a system in which multiple computers work together to perform a task, often by dividing the task into smaller subtasks that can be executed concurrently. Distributed systems are used in many applications, including web servers, cloud computing, and scientific simulations.

35. What is a system monitor and how is it used by an operating system?

A system monitor is a software tool used by an operating system to monitor the performance and status of system resources, such as CPU usage, memory usage, and network traffic. It is used to diagnose performance issues, identify bottlenecks, and optimize system performance.

36. How does an operating system handle memory fragmentation?

An operating system handles memory fragmentation by using techniques such as memory compaction, where the system moves allocated regions together to create larger contiguous free blocks, or paging, where memory is handed out in fixed-size frames so that a process does not need one contiguous region of physical memory.

37. What is the syntax for copying a file in Linux?

To copy a file in Linux, use the following syntax:

cp source_file destination_file

38. Can you explain the concept of thread synchronization?

Thread synchronization is the process of coordinating the execution of multiple threads to ensure that they access shared resources in a safe and predictable manner. This is typically accomplished using synchronization primitives such as locks, semaphores, and barriers.

39. How does an operating system handle process synchronization?

Process synchronization is the process of coordinating the execution of concurrent processes or threads to prevent race conditions, deadlocks, and other conflicts that may arise. Operating systems provide various mechanisms for process synchronization, including locks, semaphores, monitors, and message passing.

40. Can you explain the concept of a virtual machine?

A virtual machine is a software implementation of a computer system that behaves like a physical computer and can run its own operating system and applications. A virtual machine creates an isolated environment that can run multiple operating systems or applications simultaneously on a single physical machine. Virtual machines are often used in cloud computing, where multiple virtual machines can be created on a single physical server to provide computing resources to multiple clients.

41. What is a boot loader and how does it work?

A boot loader is a small program that is loaded into the memory of a computer when it is first turned on. The boot loader is responsible for loading the operating system kernel into memory and starting its execution. The boot loader typically resides in a special location on the disk, known as the boot sector, and is executed by the computer’s firmware.

42. How does an operating system handle input/output operations?

An operating system provides a set of device drivers that are responsible for managing input/output operations. Device drivers are software components that provide a standard interface for communicating with hardware devices such as keyboards, mice, printers, and storage devices.

43. How do you declare an array in Bash scripting?

To declare an array in Bash, use the following syntax:

array_name=(value1 value2 ... valueN)

44. Can you explain the concept of a process scheduler?

A process scheduler is a component of the operating system that is responsible for managing the allocation of CPU time to processes or threads. The process scheduler uses various scheduling algorithms to decide which process or thread should be executed next. These algorithms can be broadly classified into two categories: preemptive and non-preemptive. Preemptive scheduling algorithms allow the operating system to interrupt a running process or thread so that another can run. Non-preemptive algorithms let a running process keep the CPU until it finishes or voluntarily gives it up.
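As a small illustration of preemptive scheduling, here is a round-robin simulation. The process names, burst times, and quantum are hypothetical, and the sketch assumes all processes arrive at time 0 and ignores context-switch cost.

```python
from collections import deque


def round_robin(bursts, quantum):
    """Return the completion time of each process under round-robin."""
    remaining = dict(bursts)
    queue = deque(remaining)          # ready queue in arrival order
    clock = 0
    finished = {}
    while queue:
        name = queue.popleft()
        run = min(quantum, remaining[name])
        clock += run
        remaining[name] -= run
        if remaining[name] == 0:
            finished[name] = clock    # process is done
        else:
            queue.append(name)        # preempted: back of the ready queue
    return finished


print(round_robin({"P1": 5, "P2": 3, "P3": 1}, quantum=2))
# {'P3': 5, 'P2': 8, 'P1': 9}
```

The short process P3 finishes at time 5 even though it arrived last in the queue, because no process may hold the CPU for more than one quantum at a time.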

45. What is a process and how is it different from a program?

A process is a program in execution, with its own memory space, system resources, and scheduling information. It can be thought of as an instance of a program that is being executed by the operating system. A program, by contrast, is a passive set of instructions stored in a file or in memory, waiting to be executed.

46. How does an operating system handle memory protection?

Memory protection is a mechanism that prevents one process from accessing the memory space of another process. An operating system provides memory protection by dividing the memory space into different regions and assigning different access permissions to each region. It also uses hardware-based memory protection features like virtual memory, page tables, and segmentation.

47. Can you explain the concept of process states?

Process states are the different states in which a process can exist during its lifetime. The most common process states are new, ready, running, waiting, and terminated. A process is new while it is being created, ready when it is waiting to be assigned to a processor, running when its instructions are being executed by a processor, waiting when it is blocked until a particular event occurs, and terminated when it has finished executing.
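The five states can be modeled as a small state machine. The transition table below follows the usual textbook diagram and is an assumption made for the example; real kernels define more states.

```python
# Allowed moves between the five process states.
TRANSITIONS = {
    "new": {"ready"},
    "ready": {"running"},
    "running": {"ready", "waiting", "terminated"},
    "waiting": {"ready"},
    "terminated": set(),
}


def transition(current, target):
    """Return target if the move is legal, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current} -> {target}")
    return target


state = "new"
for nxt in ("ready", "running", "waiting", "ready", "running", "terminated"):
    state = transition(state, nxt)
print(state)  # terminated
```

Note that a waiting process cannot go straight to running: it must first return to the ready queue and be selected by the scheduler.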

48. What is a mutex and how is it used in synchronization?

A mutex is a synchronization object that is used to protect a shared resource from concurrent access. It allows only one thread or process to access the shared resource at a time, preventing race conditions and ensuring mutual exclusion. A mutex is acquired by a thread or process before accessing the shared resource and released after it is done with the resource.

49. What is the syntax for opening a file in Python?

To open a file in Python, use the following syntax:

file_object = open(file_name, mode)
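In practice `open` is usually paired with a `with` statement so the file is closed automatically. The following sketch writes and then reads a temporary file; the file name is hypothetical.

```python
import os
import tempfile

# Hypothetical file inside a fresh temporary directory.
path = os.path.join(tempfile.mkdtemp(), "example.txt")

# Mode "w" creates or truncates the file for writing.
with open(path, "w", encoding="utf-8") as file_object:
    file_object.write("hello\n")

# Mode "r" opens the file for reading; the file is closed on leaving the block.
with open(path, "r", encoding="utf-8") as file_object:
    content = file_object.read()

print(repr(content))  # 'hello\n'
```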

50. How does an operating system handle process scheduling and dispatching?

Process scheduling is the process of selecting the next process to be executed by the processor. The operating system uses scheduling algorithms to make this decision based on various factors like process priority, CPU burst time, and availability of resources. Once a process is selected, the operating system dispatches it to the processor for execution.

51. What is the difference between preemptive and non-preemptive scheduling?

| Aspect | Preemptive Scheduling | Non-Preemptive Scheduling |
|---|---|---|
| Definition | A scheduling approach in which the operating system can interrupt a running process or task and allocate the CPU to another process, for example one with higher priority. | A scheduling approach in which a running process or task must explicitly yield the CPU, block, or complete its execution before another process can be scheduled to run. |
| Control | The operating system has control over which process runs at any given time and can interrupt a running process to allow another process to execute. | The running process has control over the CPU until it either completes its execution or explicitly yields the CPU. |
| Priority | A higher-priority process can preempt a lower-priority one, and a time slice can expire while a process is still running. | The next process may be chosen by arrival order, priority, or burst length, but the choice is made only when the CPU becomes free. |

52. Can you explain the concept of thread states?

Thread states are the different states in which a thread can exist during its lifetime. The most common thread states are new, runnable, blocked, and terminated. A thread is new when a process is created or a thread is spawned, runnable when it is ready to be executed by the processor, blocked when it is waiting for a particular event to occur, and terminated when it has finished executing.

53. What is a system call table and how is it used by an operating system?

A system call table is a data structure that contains information about the system calls that can be made by a user program. It is used by the operating system to provide access to system resources and services, like file I/O, process management, and memory allocation. When a user program makes a system call, the operating system looks up the corresponding entry in the system call table and executes the corresponding kernel code.
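The lookup step can be pictured as a dispatch table that maps call numbers to handler functions. This is only a user-space analogy: the call numbers and handler names below are hypothetical and do not match any real kernel.

```python
import errno
import os


def sys_getpid():
    return os.getpid()


def sys_getcwd():
    return os.getcwd()


# Hypothetical call numbers; real kernels define their own numbering.
SYSCALL_TABLE = {0: sys_getpid, 1: sys_getcwd}


def dispatch(number, *args):
    """Look up the handler for a call number and run it."""
    handler = SYSCALL_TABLE.get(number)
    if handler is None:
        # Unknown number: report "function not implemented".
        raise OSError(errno.ENOSYS, "function not implemented")
    return handler(*args)


print(dispatch(0) == os.getpid())  # True
```

An unknown call number is rejected before any handler runs, which mirrors how a kernel validates the number supplied by user code.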

54. How does an operating system handle device drivers?

Device drivers are software programs that interface with hardware devices and provide an abstraction layer for the operating system to interact with them. The operating system loads device drivers into memory when the corresponding hardware device is detected, and uses them to communicate with the device. The device driver exposes a standardized interface to the operating system, which allows it to interact with the device in a consistent and predictable manner.

55. Can you explain the concept of a system image?

A system image is a snapshot of the state of an operating system, including its kernel, system libraries, drivers, configuration files, and user data. It is used for system backup and recovery, as well as for system deployment and cloning.

56. What is a critical section and how is it used in synchronization?

A critical section refers to a portion of code in a program that should not be executed by more than one thread at the same time to prevent race conditions. A thread can enter a critical section only when it has acquired the necessary lock or semaphore, and other threads have to wait until the first thread releases the lock before they can enter the critical section.

57. How does an operating system handle network protocols?

The operating system handles network protocols by providing a set of networking APIs that allow applications to communicate over the network. The operating system also manages network interfaces, routing tables, and other network-related settings to ensure that data is transmitted and received correctly.

58. Can you explain the concept of a process image?

A process image is a snapshot of a running process that contains all the data and instructions necessary to recreate the process. This includes the program code, data, stack, heap, and system resources used by the process. The process image is typically used to save the state of a process so that it can be resumed later or transferred to another system.

59. What is the difference between user-level threads and kernel-level threads?

| Aspect | User-Level Threads | Kernel-Level Threads |
|---|---|---|
| Definition | Threads are managed entirely by a user-level library or application without intervention from the operating system kernel. | Threads are managed by the operating system kernel. |
| Scheduling | Scheduling is performed by the user-level library or application, and the kernel is unaware of the existence of user-level threads. | Scheduling is performed by the kernel, which has knowledge of every kernel-level thread. |
| Context Switching | Context switching is fast since it only requires switching user-level contexts. | Context switching can be slower since it requires a transition into the kernel. |
| Blocking | User-level threads may block the entire process if one thread blocks, as the kernel cannot schedule other threads. | Kernel-level threads can be scheduled independently, so a blocked thread will not block the entire process. |

60. How do you define a function in PowerShell?

To define a function in PowerShell, use the following syntax:

    function function_name {
        # function body
    }

61. What is a page replacement algorithm and how is it used by an operating system?

A page replacement algorithm is a method used by the operating system to choose which pages of memory to evict when the physical memory is full. The goal is to minimize the number of page faults, which occur when a process tries to access a page that is not currently in memory. Popular page replacement algorithms include the Least Recently Used (LRU), First-In-First-Out (FIFO), and Clock algorithms.
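To see how the choice of algorithm changes the number of page faults, the sketch below counts faults for FIFO and LRU on a reference string commonly used in textbooks. The function names and the choice of three frames are assumptions made for the example.

```python
from collections import OrderedDict, deque


def fifo_faults(refs, frames):
    """Count page faults when the oldest loaded page is evicted first."""
    memory = deque()
    faults = 0
    for page in refs:
        if page not in memory:
            faults += 1
            if len(memory) == frames:
                memory.popleft()             # evict the page loaded earliest
            memory.append(page)
    return faults


def lru_faults(refs, frames):
    """Count page faults when the least recently used page is evicted."""
    memory = OrderedDict()                   # ordered from least to most recent
    faults = 0
    for page in refs:
        if page in memory:
            memory.move_to_end(page)         # mark as most recently used
        else:
            faults += 1
            if len(memory) == frames:
                memory.popitem(last=False)   # evict least recently used
            memory[page] = True
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(fifo_faults(refs, 3), lru_faults(refs, 3))  # 15 12
```

On this reference string LRU incurs fewer faults than FIFO, although the ranking can differ for other access patterns.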

62. How does an operating system handle system initialization and shutdown?

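During system initialization, the operating system performs a series of checks and configurations to ensure that the hardware and software components are functioning correctly. This includes loading drivers, initializing system resources, and launching system services. During shutdown, it stops services, terminates running processes, flushes buffered data to disk, and unmounts file systems before the machine powers off.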

63. Can you explain the concept of a process synchronization barrier?

A process synchronization barrier is a mechanism used in multiprocessing systems to ensure that processes synchronize at specific points in their execution. It is used to prevent race conditions and deadlocks. A barrier is created with a predefined number of processes, and each process waits at the barrier until all processes have arrived, at which point they can proceed to the next stage of execution.
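The same idea can be sketched with threads using Python's `threading.Barrier`. The worker count and the phase labels are assumptions made for the example.

```python
import threading

N_WORKERS = 3                              # hypothetical number of participants
barrier = threading.Barrier(N_WORKERS)
events = []
events_lock = threading.Lock()


def worker(name):
    with events_lock:
        events.append(("phase1", name))
    barrier.wait()                         # block until all N_WORKERS arrive
    with events_lock:
        events.append(("phase2", name))


threads = [threading.Thread(target=worker, args=(f"w{i}",))
           for i in range(N_WORKERS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

phases = [phase for phase, _ in events]
print(phases)
# ['phase1', 'phase1', 'phase1', 'phase2', 'phase2', 'phase2']
```

No worker records its second phase until every worker has finished the first, which is exactly the guarantee a barrier provides.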

64. What is a scheduling policy and how is it used by an operating system?

A scheduling policy is a set of rules used by the operating system to determine the order in which processes are scheduled to run on the CPU. The policy takes into account factors such as process priority, available resources, and CPU utilization to ensure efficient and fair usage of system resources. Different scheduling policies include first-come, first-served, round-robin, priority-based, and deadline-based scheduling.

65. What is the difference between a deadlock and a livelock?

| Aspect | Deadlock | Livelock |
|---|---|---|
| Definition | A situation where two or more processes are blocked, waiting for each other to release resources that they need to continue executing. | A situation where two or more processes are actively trying to resolve a conflict, but each process is continually changing its behavior in response to the other process’s behavior, preventing progress. |
| Cause | Caused by the circular dependency between two or more processes, where each process holds a resource that the other processes need to proceed. | Caused by a communication breakdown between processes, where each process is trying to respond to the actions of the other process, but their actions prevent progress. |
| State | Processes are in a blocked state and cannot proceed, even if other resources become available. | Processes are actively trying to resolve the conflict but are unable to make progress, despite available resources. |

66. How does an operating system handle process creation and termination?

When a process is created, the operating system allocates a process control block (PCB) to the process, which contains information about the process, such as its process ID, memory allocation, CPU registers, and state. The operating system also initializes the process with an initial state and assigns system resources, such as memory and I/O devices, to the process. When a process is terminated, the operating system deallocates the resources assigned to the process, frees the PCB, and updates the process status.

67. Can you explain the concept of a virtual file system?

A virtual file system (VFS) is an abstraction layer used by the operating system to provide a unified view of the file system to applications. The VFS allows applications to access files and directories using a standard set of system calls, regardless of the underlying file system used by the operating system. The VFS provides a common interface to file operations, such as opening, closing, reading, and writing files, and provides support for different file system types, such as FAT, NTFS, and ext4.

68. What is a race condition and how is it resolved?

A race condition is a situation that occurs when multiple processes or threads access a shared resource simultaneously and the order of their execution affects the outcome of the program. To resolve a race condition, synchronization mechanisms such as mutexes, semaphores, and barriers can be used to ensure that only one process or thread can access the shared resource at a time.

69. How does an operating system handle memory swapping?

When the available physical memory in a system is insufficient to meet the needs of running processes, the operating system can swap out pages of memory from inactive processes to disk, freeing up physical memory for use by other processes. The swapped-out pages are stored in a swap space on disk and can be swapped back into physical memory when needed.

70. What is the difference between a semaphore and a mutex?

| Aspect | Semaphore | Mutex |
|---|---|---|
| Definition | A synchronization object that controls access to a shared resource by multiple processes or threads. | A synchronization object that protects shared resources from simultaneous access by multiple processes or threads. |
| Type | Counting semaphores and binary semaphores. | A mutex is always binary: it is either locked or unlocked. |
| Usage | Used to control access to a shared resource with multiple instances available, such as a fixed pool of resources. | Used to protect access to a shared resource with only one instance available, such as a file or a critical section of code. |
| Ownership | A semaphore has no owner, so any process or thread may signal it. | Ownership of a mutex is exclusive, and only the process or thread that acquired it can release it. |

71. What is a page table and how is it used by an operating system?

A page table is a data structure used by the operating system to translate virtual memory addresses used by applications into physical memory addresses used by the CPU. The page table contains entries that map virtual memory pages to physical memory frames and includes information such as access permissions and page status. The operating system uses the page table to manage virtual memory and ensure memory protection.
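Address translation can be sketched in a few lines. The 4 KiB page size and the contents of the page table below are assumptions made for the example, and a real page table entry would also carry permission and status bits.

```python
PAGE_SIZE = 4096                     # assumed page size of 4 KiB

# Hypothetical mapping from virtual page number to physical frame number.
page_table = {0: 5, 1: 9, 2: 1}


class PageFault(Exception):
    """Raised when a virtual page has no mapping in the page table."""


def translate(virtual_address):
    """Translate a virtual address into a physical address."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in page_table:
        raise PageFault(f"page {page} is not mapped")
    # The offset within the page is unchanged by translation.
    return page_table[page] * PAGE_SIZE + offset


print(translate(4100))  # 36868
```

Address 4100 falls in virtual page 1 at offset 4; page 1 maps to frame 9, so the physical address is 9 * 4096 + 4 = 36868.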

72. How does an operating system handle a system call from user space?

When an application makes a system call, the operating system switches the CPU from user mode to kernel mode and executes the corresponding kernel code to perform the requested operation. The operating system validates the system call parameters, checks the system call table to locate the appropriate kernel function, and executes the function with the required privileges. After the system call is completed, the operating system returns control to the application and switches the CPU back to user mode.

73. Can you explain the concept of a system image backup and restore?

A system image backup is a complete copy of a computer system’s state at a particular point in time, including the operating system, applications, and data. It is used to restore the system to that point in time in case of a failure or disaster. System image backup and restoration are essential for disaster recovery and system maintenance.

74. What is a thread pool and how is it used in multi-threading?

A thread pool is a collection of pre-created threads that are ready to be used for performing a set of tasks. It is used to minimize the overhead of creating and destroying threads repeatedly. When a task is submitted to a thread pool, a thread from the pool is assigned to execute it. Once the task is completed, the thread returns to the pool and becomes available for another task.
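As a minimal sketch, Python’s standard `concurrent.futures.ThreadPoolExecutor` behaves this way. The `square` task and the `run_tasks` helper are placeholders chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def square(n):
    return n * n


def run_tasks(values, workers=4):
    # The executor starts at most `workers` threads and reuses them for every
    # task instead of creating one thread per task.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands each value to an idle worker and keeps the input order.
        return list(pool.map(square, values))
```

Leaving the `with` block waits for outstanding tasks and then shuts the worker threads down.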

75. How does an operating system handle network sockets?

An operating system provides an Application Programming Interface (API) to create, manage and communicate through network sockets. The operating system maintains a table of open sockets that are used by applications to communicate over the network. When an application wants to communicate over the network, it requests a socket from the operating system. The operating system creates a socket and returns a unique identifier to the application, which can then use this identifier to send or receive data over the network.
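The request-and-identifier pattern can be seen with Python’s `socket` module. This sketch uses `socket.socketpair()` so that it needs no network; the `socket_roundtrip` helper is a name invented for the example.

```python
import socket


def socket_roundtrip(message):
    # socketpair() asks the OS for two sockets that are already connected.
    left, right = socket.socketpair()
    try:
        descriptor = left.fileno()  # the integer identifier the OS handed back
        left.sendall(message)       # send through one end
        received = right.recv(1024) # receive on the other end
        return descriptor, received
    finally:
        # Closing tells the OS to release its entries for both sockets.
        left.close()
        right.close()
```

A networked program would follow the same shape with `socket.socket()`, `connect()` or `bind()`/`listen()`/`accept()` in place of `socketpair()`.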

76. What is the difference between symmetric multiprocessing and asymmetric multiprocessing?

| Aspect | Symmetric Multiprocessing | Asymmetric Multiprocessing |

|---|---|---|

| Definition | A multiprocessing system where all processors have equal access to memory and other system resources, and can perform any task assigned to them. | A multiprocessing system where each processor is assigned specific tasks or types of tasks, and may have unique access to memory or other system resources. |

| Resource Sharing | All processors share resources, such as memory, I/O devices, and buses, equally. | Each processor may have different access levels to shared resources, depending on their specific tasks. |

| Load Balancing | The workload is balanced across all processors, and tasks can be moved between processors as needed. | The workload is assigned to specific processors based on their capabilities or assigned role, and tasks cannot be easily moved between processors. |

77. Can you explain the concept of a process address space?

A process address space is the memory space assigned to a process by the operating system. It contains the program code, data, heap, and stack for the process. Each process has its own address space, which is protected from other processes to maintain security and prevent interference.

78. What is a memory leak and how is it prevented?

A memory leak occurs when a program allocates memory but does not release it after it is no longer needed, causing a gradual loss of available memory. This can cause the program to crash or the operating system to slow down. Memory leaks can be prevented by careful memory management, including ensuring that all memory allocated is eventually freed, using automated memory management techniques like garbage collection, and using tools to detect and fix leaks.

79. What is the difference between virtual memory and physical memory?

| Aspect | Virtual Memory | Physical Memory |

|---|---|---|

| Definition | A memory management technique that gives each process its own virtual address space and lets the computer compensate for shortages of physical memory by temporarily moving pages of data from random access memory (RAM) to disk storage. | The actual RAM chips installed on a computer’s motherboard or on other hardware devices, such as graphics cards. |

| Management | Managed by the operating system, which sets up the mapping from virtual addresses to physical addresses. | Allocated by the operating system; the hardware memory management unit (MMU) translates virtual addresses to physical addresses on each access. |

| Size | Virtual memory can be much larger than physical memory, as it can include a combination of RAM and disk storage. | Physical memory is limited by the number and size of memory chips installed on a computer’s hardware. |

80. How does an operating system handle memory allocation for kernel space?

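Kernel-space memory is allocated by the kernel’s own memory manager rather than through the allocation interfaces that applications use. The kernel reserves a region of the virtual address space for itself and protects it with paging and memory protection so that user-mode code cannot access it. When a kernel component such as a driver or the file system needs memory, it calls an internal kernel allocator (Linux, for example, uses a page allocator for whole pages and a slab allocator for small objects), which returns a kernel virtual address. The kernel tracks these allocations and frees them when they are no longer needed, which prevents conflicts between kernel and user space and maintains the stability and security of the system.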

81. Can you explain the concept of a file descriptor?

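A file descriptor is a small non-negative integer that identifies an open file (or another I/O resource such as a pipe or socket) within a process. The operating system returns it when the file is opened, and the process passes it back in later calls such as read, write, and close; the kernel uses it as an index into the process’s table of open files. File descriptors are unique only within a process. A child process can inherit them from its parent, and on Unix-like systems they can also be sent to another process over a Unix domain socket, which lets processes share the same open file.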
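The following sketch works with a raw file descriptor through Python’s `os` module, which wraps the underlying operating system calls. The `write_then_read` helper and the file name `demo.txt` are assumptions for the example.

```python
import os
import tempfile


def write_then_read(data):
    """Write bytes through a file descriptor, then read them back."""
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "demo.txt")
        # os.open() returns the integer descriptor for the newly opened file.
        fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
        try:
            os.write(fd, data)
            os.lseek(fd, 0, os.SEEK_SET)  # rewind to the start of the file
            return fd, os.read(fd, len(data))
        finally:
            os.close(fd)  # release the descriptor so the number can be reused
```

Every call after `os.open()` refers to the file only by its descriptor, never by its path.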

82. What is context switch overhead and how is it reduced?

Context switch overhead is the time and resources spent saving the state of one process or thread and loading the state of another. Because no useful work is done during the switch, frequent switching reduces system performance and efficiency. The overhead can be reduced by minimizing the number of context switches, using threads instead of processes where appropriate (a switch between threads of the same process is cheaper), using efficient scheduling algorithms, and optimizing the use of system resources.

83. What is the difference between multi-tasking and multi-threading?

| Aspect | Multi-tasking | Multi-threading |

|---|---|---|

| Definition | Refers to the ability of an operating system to run multiple tasks or processes concurrently on a single processor or CPU. | Refers to the ability of a program or process to have multiple threads of execution within it, allowing it to perform multiple tasks concurrently. |

| Focus | Focuses on the execution of multiple tasks or processes simultaneously. | Focuses on the execution of multiple threads within a single process or program. |

| Resources | Multiple tasks share system resources such as CPU time, memory, and I/O devices. | Multiple threads share the same resources within a single process, such as memory and CPU time. |

| Interaction | Tasks are independent and can run concurrently or sequentially, depending on the operating system’s scheduling algorithm. | Threads within a process can interact with each other, sharing data and resources within the same address space. |

84. How does an operating system handle inter-process communication through pipes?

An operating system provides pipes as a means of inter-process communication. A pipe is a one-way channel: one process writes data into a buffer that the kernel manages, and another process reads it out in the same order. The operating system handles the synchronization: a reader blocks while the pipe is empty, a writer blocks while the buffer is full, and the reader sees end-of-file once every write end has been closed.
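A parent and child process communicating over a pipe can be sketched as follows. The example assumes a POSIX system, because it relies on `os.fork()`; the `pipe_demo` helper is a name invented for illustration.

```python
import os


def pipe_demo(message):
    """Fork a child that writes `message` into a pipe; the parent reads it."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: it only writes, so it closes the read end first.
        os.close(read_fd)
        os.write(write_fd, message)
        os.close(write_fd)
        os._exit(0)
    # Parent: it only reads, so it closes its copy of the write end.
    os.close(write_fd)
    chunks = []
    while True:
        chunk = os.read(read_fd, 1024)
        if not chunk:  # empty read means every write end has been closed
            break
        chunks.append(chunk)
    os.close(read_fd)
    os.waitpid(pid, 0)  # reap the child so it does not linger as a zombie
    return b"".join(chunks)
```

Closing the unused ends matters: if the parent kept its write end open, the read loop would never see end-of-file.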

85. How does an operating system handle shared memory segments?

Shared memory is a technique used by an operating system to allow multiple processes to share a region of memory. This can be useful for processes that need to exchange data quickly and efficiently. The operating system provides a shared memory segment that can be accessed by multiple processes simultaneously. Each process maps the shared memory segment into its own address space, allowing it to read and write to the shared memory as if it were its own memory.
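A minimal sketch using Python’s `multiprocessing.shared_memory` module (Python 3.8 or later) is shown below. To keep it self-contained, a second handle attached by name stands in for a second process; the `shared_memory_demo` helper is an assumption for the example, and `payload` must be non-empty.

```python
from multiprocessing import shared_memory


def shared_memory_demo(payload):
    """Write bytes into a shared segment and read them via a second mapping."""
    segment = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        segment.buf[:len(payload)] = payload  # write through the first mapping
        # Another process would attach the same way, using the segment's name.
        other = shared_memory.SharedMemory(name=segment.name)
        try:
            return bytes(other.buf[:len(payload)])  # read via the second mapping
        finally:
            other.close()
    finally:
        segment.close()
        segment.unlink()  # ask the OS to remove the segment itself
```

Shared memory provides no synchronization of its own, so real programs pair it with a lock or semaphore.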

86. Can you explain the concept of process affinity and how it is set?

Process affinity (also called CPU affinity) means restricting a process to run on a specific processor or a subset of processors in a multi-processor system. It is useful mainly for performance: keeping a process on the same CPU preserves its cache contents, and placing it close to the hardware it uses most (for example, the CPU that services a network card’s interrupts) can reduce latency. By default the scheduler decides where a process runs; affinity can be set explicitly by an administrator or by the application through system calls or APIs, such as sched_setaffinity on Linux or SetProcessAffinityMask on Windows.
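On Linux, Python exposes the affinity calls as `os.sched_getaffinity` and `os.sched_setaffinity`. The sketch below pins the current process to one CPU and then restores the original setting; the `pin_temporarily` helper is hypothetical, and it returns `None` on platforms that lack these calls.

```python
import os


def pin_temporarily():
    """Pin the calling process to one CPU, report the result, then restore."""
    if not hasattr(os, "sched_setaffinity"):
        return None  # the calls are not available on this platform
    original = os.sched_getaffinity(0)  # pid 0 means "the calling process"
    try:
        os.sched_setaffinity(0, {min(original)})  # allow a single CPU only
        return os.sched_getaffinity(0)
    finally:
        os.sched_setaffinity(0, original)  # put the original CPU set back
```

The returned set contains exactly one CPU number when pinning succeeds.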

87. What is a fork bomb and how is it prevented?

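A fork bomb is a type of denial-of-service attack that involves creating a large number of processes in a short period of time, overwhelming the system and causing it to crash or become unresponsive. A fork bomb typically uses a recursive loop that creates new processes until the system resources are exhausted. It is prevented by limiting how many processes a single user or group of processes may create, for example with ulimit -u (the RLIMIT_NPROC resource limit) on Unix-like systems or with the pids controller of Linux control groups (cgroups), so that further attempts to create processes fail once the limit is reached.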
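One common defense against this kind of process explosion is a per-user process limit. The sketch below inspects and lowers that limit with Python’s `resource` module, assuming a Unix-like system that defines `RLIMIT_NPROC`; the helper names are invented for illustration.

```python
import resource


def process_limit():
    """Return the (soft, hard) cap on the number of processes for this user."""
    return resource.getrlimit(resource.RLIMIT_NPROC)


def cap_processes(max_processes):
    """Lower the soft limit so runaway process creation fails with an error."""
    _soft, hard = process_limit()
    if hard != resource.RLIM_INFINITY:
        # The soft limit may not exceed the hard limit.
        max_processes = min(max_processes, hard)
    resource.setrlimit(resource.RLIMIT_NPROC, (max_processes, hard))
```

The limit applies to the calling process and the children it creates afterwards, so a shell or service manager typically sets it before starting untrusted work.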

88. How does an operating system handle kernel modules?

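Kernel modules are pieces of code that can be loaded into and unloaded from a running kernel to extend its functionality without rebooting or rebuilding the kernel. Device drivers, which let the operating system interface with hardware such as printers, scanners, and network cards, are the most common kind, but file systems and network protocols can also be implemented as modules. When a new hardware device is connected to the system, the operating system identifies it and loads the appropriate kernel module to handle communication with the device. On Linux, modules can also be loaded and removed manually with commands such as modprobe and rmmod.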

89. Can you explain the concept of a system call interface?

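A system call interface is a set of functions provided by the operating system that allows user applications to interact with the kernel and access system resources such as files, network connections, and hardware devices. System calls provide a standardized way for applications to make requests to the operating system: each call has a defined name or number, a fixed set of arguments, and a defined return value or error code. Applications usually reach the interface through library wrapper functions (for example, the C library’s open, read, and write) rather than by invoking system calls directly.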

90. What is a spin lock and how is it used in synchronization?

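A spin lock is a type of synchronization mechanism used by operating systems to protect shared resources such as memory or hardware devices from concurrent access by multiple threads or processes. When a thread or process attempts to acquire a spin lock and finds it is already held by another thread or process, it will enter a busy-wait loop that repeatedly checks the status of the lock until it is released. Because the waiting thread consumes CPU time while it spins but avoids the cost of a context switch, spin locks are appropriate only when the lock is expected to be held for a very short time, typically on multiprocessor systems.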
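The busy-wait loop can be sketched in Python as follows. This is an illustration only: the `SpinLock` class is hypothetical, and it borrows a non-blocking `threading.Lock.acquire` as a stand-in for the atomic test-and-set instruction a real spin lock would use.

```python
import threading


class SpinLock:
    def __init__(self):
        # Used only as an atomic flag, never acquired in blocking mode.
        self._flag = threading.Lock()

    def acquire(self):
        # Busy-wait: keep retrying instead of sleeping until the flag is free.
        while not self._flag.acquire(blocking=False):
            pass

    def release(self):
        self._flag.release()


def count_with_spinlock(threads=4, increments=500):
    """Increment a shared counter from several threads under the spin lock."""
    lock = SpinLock()
    total = 0

    def worker():
        nonlocal total
        for _ in range(increments):
            lock.acquire()
            try:
                total += 1  # the critical section is kept very short
            finally:
                lock.release()

    workers = [threading.Thread(target=worker) for _ in range(threads)]
    for thread in workers:
        thread.start()
    for thread in workers:
        thread.join()
    return total
```

The counter ends at `threads * increments` because only one thread at a time can be inside the critical section.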

91. How does an operating system handle real-time tasks?

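Real-time tasks are those that have strict timing requirements (deadlines), such as those found in industrial control systems or multimedia applications. To handle them, the operating system uses priority-based preemptive scheduling so that a real-time task that becomes ready runs ahead of ordinary tasks, and it keeps interrupt and scheduling latency low and predictable. Common approaches include fixed-priority policies (such as the POSIX SCHED_FIFO and SCHED_RR policies) and deadline-based policies such as earliest deadline first (EDF). Hard real-time systems must never miss a deadline, while soft real-time systems tolerate occasional misses.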

92. What is the difference between process and thread?

| Process | Thread |

|---|---|

| An independent program execution unit | A lightweight execution unit within a process |

| Contains its own memory, system resources, and state | Can share resources and memory with other threads within the same process |

| Executes independently of other processes | Executes concurrently with other threads within the same process |

| Switching between processes is slower because the address space and other per-process state must be switched as well | Switching between threads of the same process is faster because they share an address space and only the registers and stack change |

| Requires inter-process communication for data sharing | Data sharing is easier and faster as threads share the same memory space |

| A process contains one or more threads | A thread always belongs to exactly one process |

| Example: A web browser or a media player | Example: A thread for handling user input or a thread for background tasks |

93. What are the different states of a process?

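- New: the process is being created.

- Ready: the process is waiting to be assigned to a CPU.

- Running: the process’s instructions are being executed on a CPU.

- Waiting (blocked): the process is waiting for an event, such as the completion of an I/O operation.

- Terminated: the process has finished execution.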

94. What are the different types of operating systems?

- Batch operating systems

- Distributed operating systems

- Time-sharing operating systems

- Multi-programmed operating systems

- Real-time operating systems

95. What are two types of semaphores?

- Binary semaphores

- Counting semaphores

96. What are the different types of Kernel?

- Monolithic Kernel

- Microkernel

- Hybrid Kernel

- Nanokernel

- Exokernel

97. What is SMP (Symmetric Multiprocessing)?

SMP (Symmetric Multiprocessing) is a type of computer architecture that uses multiple processors or CPUs to execute tasks in parallel. In an SMP system, all processors have equal access to memory and other resources, and each processor can execute any task. SMP is commonly used in high-performance computing environments, where multiple processors can significantly improve performance and increase the throughput of computing systems.

98. What is Context Switching?

Context switching occurs when a computer’s operating system switches the CPU from running one process or thread to another. The OS saves the current state of the running process or thread, including the CPU registers and program counter, typically in its process control block (PCB), and loads the saved state of the next one. Memory contents are not copied; the OS switches to the new process’s address space instead. Context switching is a fundamental aspect of multitasking operating systems and is critical for efficient CPU utilization and sharing.

99. What is spooling in OS?

Spooling in OS stands for Simultaneous Peripheral Operations Online, and it is a technique used to improve the performance of printers and other input/output devices. Spooling involves storing data in a buffer or temporary file before it is sent to the printer or device. This technique allows the OS to free up the application from waiting for the device to finish its current task and enables the application to continue executing while the device completes its operation in the background. This can significantly improve system performance and efficiency.

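100. Where are batch operating systems used in real life?

Batch operating systems are used for jobs that process large volumes of data without user interaction, such as payroll systems and the generation of bank statements.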

This collection of the Top 100 OS interview questions and answers can be an excellent resource for those seeking to excel in interviews for jobs related to operating systems. If you are seeking further learning opportunities, you may consider visiting freshersnow.com, which offers a variety of resources for career development and advancement.