Whether you are an experienced professional or a fresher preparing for a software testing interview, this compilation of the Top 100 Software Testing Interview Questions and Answers covers the core concepts you are likely to be asked about. Let’s start with the Software Testing Interview Questions for Freshers.

Top 100 Software Testing Interview Questions and Answers

1. What is software testing, and why is it important?

Software testing is a process of evaluating a software product or application to detect any errors, bugs, or defects. It is essential to ensure that the software meets the requirements, functions as intended, and is of high quality. Effective testing helps to improve the reliability, performance, and security of the software, which leads to increased customer satisfaction.

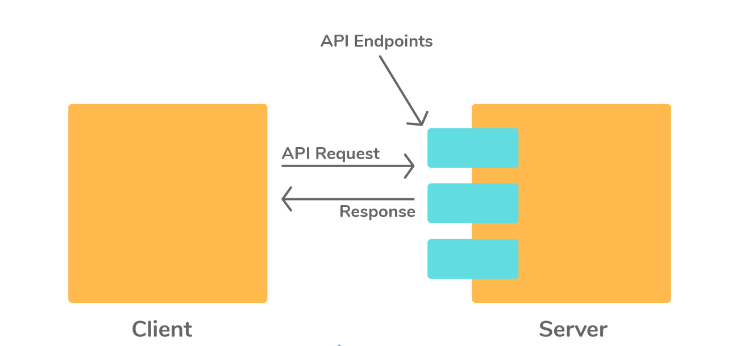

2. What is an API?

- An API (Application Programming Interface) is a defined set of rules and endpoints that lets one piece of software request data or services from another.

- APIs can also be used to integrate different software components, services, or systems. For example, if you are building an e-commerce website, you may need to integrate payment gateways, shipping providers, and inventory management systems. APIs connect these systems so that they can communicate with each other seamlessly.

- APIs are critical in today’s technology landscape as they enable businesses to share and exchange data and services more efficiently. This has led to the development of many web and mobile applications that rely on APIs to function. Many companies also use APIs to create new revenue streams by providing access to their data or services to third-party developers.

3. What are the different types of software testing?

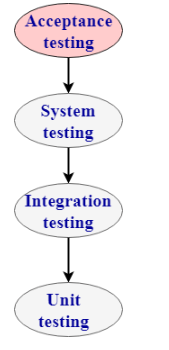

There are several types of software testing, including:

- Unit testing

- Integration testing

- System testing

- Acceptance testing

- Regression testing

- Performance testing

- Security testing

- Usability testing

- Compatibility testing

- Exploratory testing

4. Can you explain the difference between functional testing and non-functional testing?

Functional testing is used to ensure that the software meets the functional requirements and performs as expected. Non-functional testing, on the other hand, is used to evaluate the non-functional aspects of the software, such as its performance, security, usability, compatibility, and reliability.

5. What is the difference between manual testing and automated testing?

Manual testing is the process of testing a software application by hand, without automation tools. The tester executes each test case and checks the results against the expected outcomes. Automated testing, on the other hand, uses automation tools to execute test cases. It involves creating test scripts and running them with those tools.

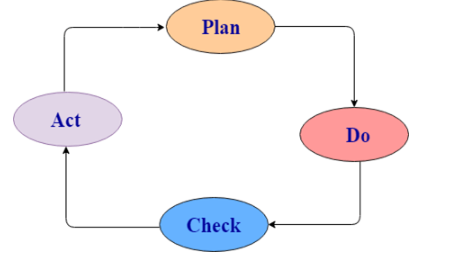

6. What is the PDCA cycle, and where does testing fit in?

- PDCA stands for Plan, Do, Check, Act.

- Plan defines the goal and the strategy to achieve it.

- Do/Execute is the stage where the plan is put into action.

- Check is the testing phase to ensure that the project is progressing according to plan and yielding desired results.

- Act involves taking appropriate action to address any issues found during the Check phase and revising the plan accordingly.

- PDCA is a continuous improvement cycle that helps teams to identify areas of improvement and implement changes for better results.

- This cycle can be applied to various fields, including software development, business, and management.

- The PDCA cycle can help teams to minimize errors, reduce risks, and optimize their processes for better outcomes.

7. What are the advantages and disadvantages of using automated testing?

Advantages:

- Faster and more efficient than manual testing.

- More reliable and consistent.

- Reusable and scalable.

- Allows for more thorough testing.

- Provides detailed reports and metrics.

Disadvantages:

- Initial setup and maintenance can be time-consuming.

- Cannot replace the need for human testing entirely.

- Requires technical expertise to create and maintain automated tests.

- Less suitable for checks that depend on human judgment, such as the visual design or overall feel of user interfaces and graphics-heavy applications.

8. Can you explain the difference between keyword-driven testing and data-driven testing?

- Keyword-driven testing is a testing technique where the test cases are designed and executed based on keywords or action words, which are associated with specific test steps.

- Data-driven testing is a testing technique where the test cases are designed and executed based on a set of input data, which is used to test the software under different scenarios.
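
The contrast between the two techniques can be sketched in a few lines of Python. This is only an illustration: the `login` function, the credentials, and the keyword names are hypothetical and stand in for a real application and a real keyword library.

```python
# Hypothetical system under test: a tiny login check.
def login(username, password):
    return username == "alice" and password == "s3cret"


# Data-driven: one test procedure, many rows of input data.
LOGIN_DATA = [
    # (username, password, expected result)
    ("alice", "s3cret", True),
    ("alice", "wrong", False),
    ("", "s3cret", False),
]


def run_data_driven():
    """Run the same check once per data row; True means the row passed."""
    return [login(user, pw) == expected for user, pw, expected in LOGIN_DATA]


# Keyword-driven: a test is a list of named actions mapped to code.
def run_keyword_driven(steps):
    """Execute (keyword, *args) steps and return the final login state."""
    state = {"username": "", "password": "", "logged_in": False}

    def enter_username(value):
        state["username"] = value

    def enter_password(value):
        state["password"] = value

    def click_login():
        state["logged_in"] = login(state["username"], state["password"])

    keywords = {
        "enter_username": enter_username,
        "enter_password": enter_password,
        "click_login": click_login,
    }
    for keyword, *args in steps:
        keywords[keyword](*args)
    return state["logged_in"]
```

In the data-driven half, adding a scenario means adding a row to `LOGIN_DATA`. In the keyword-driven half, adding a scenario means writing a new list of steps such as `[("enter_username", "alice"), ("enter_password", "s3cret"), ("click_login",)]`.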

9. How do you handle test data in automated testing?

- Test data should be stored separately from the test scripts and managed through a dedicated test data management system.

- Test data should be carefully selected and designed to cover a wide range of scenarios and edge cases.

- Test data should be regularly updated and maintained to ensure accuracy and relevance.

10. What is acceptance testing?

- User acceptance testing (UAT) is performed after the product is tested by the testers to determine if it satisfies user needs, requirements, and business processes.

- Operational acceptance testing (OAT) is performed after UAT but before the product is released to the market.

- Contract and regulation acceptance testing involves testing the system against criteria set in a contract or government regulations.

- Alpha testing is performed in the development environment before release and feedback is taken from alpha testers to improve the product.

- Beta testing is performed in the customer environment, with customers providing feedback to improve the product.

11. Can you explain the concept of a test harness?

A test harness is a set of tools, procedures, and protocols used to run and manage automated tests. It provides a framework for executing tests, collecting and analyzing results, and reporting on the overall testing process.

12. What is the difference between a test script and a test case?

- A test script is a set of instructions or code used to automate the testing process.

- A test case is a set of steps or instructions used to test a specific functionality or feature of the software.

13. Can you explain the concept of test data management?

Test data management is the process of collecting, storing, and managing test data used in automated testing. It involves selecting and designing relevant test data, managing data sources, and maintaining data integrity.

14. Can you explain the concept of code branching?

Code branching is a software development practice where code changes are managed through separate branches or versions, to enable parallel development and testing.

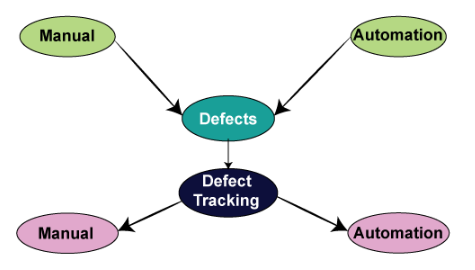

15. How do you track a bug manually and with the help of automation?

- Identify the bug.

- Make sure that it is not a duplicate (that is, search for it in the bug repository).

- Prepare a bug report.

- Store it in the bug repository.

- Send it to the development team.

- Manage the bug life cycle (i.e., keep modifying the status).

We have various bug-tracking tools available in the market, such as:

- Jira

- Bugzilla

- Mantis

- Telelogic

- Rational ClearQuest

- Bug_track

- Quality Center

16. How do you ensure that your automated tests are maintainable?

- Use a modular and scalable testing framework.

- Write clean, organized, and easy-to-understand test scripts.

- Use descriptive and meaningful test case names and comments.

- Regularly review and refactor test code to remove redundancy and improve readability.

17. Can you explain the concept of continuous integration?

Continuous integration is a software development practice where code changes are regularly integrated and tested, to detect and resolve defects early in the development process.

18. How do you ensure that your automated tests are reliable?

- Use a robust and well-designed testing framework.

- Use relevant and accurate test data.

- Regularly review and maintain test scripts.

- Monitor test results and metrics to detect and resolve issues.

19. Can you explain the concept of risk-based testing?

Risk-based testing is a testing approach where testing efforts are focused on the most critical and high-risk areas of the software. It involves identifying potential risks, assessing their likelihood and impact, and prioritizing testing efforts accordingly.
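
One common way to turn this into a ranking is to score each area as likelihood multiplied by impact. The sketch below assumes a 1 to 5 scale for both factors; the areas and their scores are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    area: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self):
        return self.likelihood * self.impact


def prioritize(items):
    """Return items ordered from highest to lowest risk score."""
    return sorted(items, key=lambda item: item.score, reverse=True)


# Hypothetical areas of an application and their assessed risk.
areas = [
    RiskItem("payment processing", likelihood=3, impact=5),
    RiskItem("profile page styling", likelihood=2, impact=1),
    RiskItem("login", likelihood=4, impact=4),
]
ordered = [item.area for item in prioritize(areas)]
```

With these numbers, login (score 16) is tested first, payment processing (15) second, and profile page styling (2) last.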

20. Can you explain the difference between a test stub and a test driver?

- A test stub is a piece of code used to simulate a software component that the code being tested depends on.

- A test driver is a piece of code used to execute and control the test cases.
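
A minimal sketch of both roles, assuming a hypothetical `checkout` function that depends on a payment gateway. The stub replaces the gateway, and the driver calls the code under test and records the outcome.

```python
class PaymentGatewayStub:
    """Stub: stands in for a real payment gateway the code depends on."""

    def charge(self, amount):
        # Canned response; no network call is made.
        return {"status": "approved", "amount": amount}


def checkout(cart_total, gateway):
    """Code under test: charges the cart total through a gateway."""
    if cart_total <= 0:
        raise ValueError("cart total must be positive")
    response = gateway.charge(cart_total)
    return response["status"] == "approved"


def run_driver():
    """Driver: calls the code under test and records pass/fail results."""
    results = {}
    results["valid total is charged"] = checkout(25.0, PaymentGatewayStub()) is True
    try:
        checkout(0, PaymentGatewayStub())
        results["zero total is rejected"] = False
    except ValueError:
        results["zero total is rejected"] = True
    return results
```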

21. What is a test case, and how do you create one?

A test case is a document that describes the steps to be followed to test a specific functionality of a software application. It includes the preconditions, input data, expected results, and post-conditions. To create a test case, you need to identify the functionality to be tested, define the input data and expected results, and document the steps to be followed to test the functionality.

22. Can you explain the difference between a test plan and a test strategy?

A test plan is a document that outlines the approach, scope, objectives, and timelines for testing a software application. It includes details such as the testing types, test environment, testing tools, and test schedules. A test strategy, on the other hand, is a high-level document that outlines the overall approach to testing a software application. It includes details such as the testing methodology, testing types, and test environment.

23. What is the difference between functional testing and non-functional testing in software testing?

| Functional Testing | Non-Functional Testing |

|---|---|

| Tests whether the software meets its functional requirements | Tests how well the software performs non-functional requirements |

| Focuses on what the software does | Focuses on how the software does it |

| Examples: Unit testing, integration testing, system testing, acceptance testing | Examples: Performance testing, security testing, usability testing, compatibility testing |

24. How do you prioritize which test cases to execute?

Test cases can be prioritized based on several factors such as the criticality of the functionality being tested, the risk associated with the functionality, and the frequency of use of the functionality. The prioritization should be based on the business requirements, customer needs, and the overall objectives of the software application.

25. Can you explain the V-model of software testing?

The V-model of software testing is a software development model that emphasizes the importance of testing at each stage of the software development life cycle. The model consists of two branches – the left branch represents the development process, while the right branch represents the testing process. The testing activities are mapped to the corresponding development activities, and testing is performed at each stage to ensure that the software meets the specified requirements.

26. What is a regression test, and when is it used?

A regression test is a type of testing that is performed to ensure that changes or modifications to the software do not introduce new bugs or defects. It involves re-executing previously passed test cases to ensure that the existing functionality has not been impacted by the changes. Regression testing is typically used when new features are added, defects are fixed, or changes are made to the software.

27. What is exploratory testing, and when is it used?

Exploratory testing is a software testing approach where the tester designs and executes tests based on their knowledge, experience, and intuition, without relying on pre-defined test cases or scripts. It is used when the requirements are unclear or changing frequently, and when the tester needs to find defects quickly.

28. How do you measure the effectiveness of your testing efforts?

The effectiveness of testing efforts can be measured by various metrics such as code coverage, defect detection rate, and user satisfaction. Code coverage measures the percentage of the codebase that is exercised by tests, while defect detection rate measures the number of defects found per unit of time or effort. User satisfaction can be measured through surveys or feedback mechanisms.

29. What is boundary value testing and how is it useful in software testing?

Boundary value testing is a technique used in software testing to evaluate the behavior of a system at its limits. It involves testing input values at the boundaries of their valid ranges to ensure that the system handles them correctly. For example, if the valid input range for a field is 1-10, boundary value testing would test inputs of 1, 10, and values just outside that range.
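
The 1-10 example can be written out as a small sketch. The `accepts` validator is hypothetical; the test values are the two boundaries, their nearest valid neighbors, and the first invalid value on each side.

```python
def accepts(value):
    """Hypothetical validator for a field whose valid range is 1-10."""
    return 1 <= value <= 10


# Boundary values mapped to the result we expect from the validator.
BOUNDARY_CASES = {
    0: False,   # just below the lower boundary
    1: True,    # lower boundary
    2: True,    # just above the lower boundary
    9: True,    # just below the upper boundary
    10: True,   # upper boundary
    11: False,  # just above the upper boundary
}


def check_boundaries():
    """Return, per input value, whether the validator matched expectations."""
    return {value: accepts(value) == expected
            for value, expected in BOUNDARY_CASES.items()}
```

A typical off-by-one defect, such as writing `1 < value` instead of `1 <= value`, would make the entry for `1` come back `False`.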

30. How does black-box testing differ from white-box testing in software testing?

| Black-box testing | White-box testing |

|---|---|

| Tests the functionality of the software without looking at the internal structure of the code | Tests the internal structure of the code to ensure it meets its design and quality requirements |

| Test cases are designed based on the software’s specifications and requirements | Test cases are designed based on the internal structure and logic of the code |

| Testers are not required to have knowledge of the code or its implementation | Testers require knowledge of the code and its implementation |

| Examples: Functional testing, integration testing, acceptance testing | Examples: Unit testing, code coverage testing, static testing |

31. How do you determine which test cases to automate?

We determine which test cases to automate by evaluating factors such as the frequency of testing, the complexity of the tests, and the time it takes to execute them manually. Tests that are executed frequently, require complex data or input, and take a long time to execute are ideal candidates for automation.

32. What is exploratory testing, and how does it differ from scripted testing?

Exploratory testing is a software testing technique where the tester simultaneously designs, executes and evaluates tests. It involves learning about the application under test by exploring its features and finding defects that might not be caught by scripted testing. Scripted testing, on the other hand, involves following pre-written test cases to verify the expected behavior of the application.

33. What are the key differences between regression testing and smoke testing?

| Regression testing | Smoke testing |

|---|---|

| Tests the existing functionalities of the software after changes or updates have been made | Tests the critical functionalities of the software to ensure it is stable enough to proceed with further testing |

| Aims to detect any unexpected changes or defects in the software after changes have been made | Aims to detect any critical failures or showstopper defects before proceeding with further testing |

| Usually performed after a major change or update | Usually performed before the start of formal testing |

| Examples: Retesting, sanity testing, confirmation testing | Examples: Build verification testing, acceptance testing |

34. What is regression testing, and why is it important?

Regression testing is a type of software testing that checks whether changes to the software have introduced new defects or caused existing defects to resurface. It is important because it helps ensure that changes made to the software do not negatively impact its functionality or performance.

35. What is the difference between functional testing and non-functional testing?

Functional testing checks the functionality of the software, whereas non-functional testing checks non-functional aspects of the software, such as performance, usability, security, and compatibility.

36. What is the difference between smoke testing and sanity testing?

Smoke testing is done to ensure that the most important functionalities of the software work before detailed testing begins. Sanity testing, on the other hand, is a subset of regression testing that checks that a specific change or fix works and has not adversely affected related functionality.

37. What distinguishes performance testing from stress testing in software testing?

| Performance testing | Stress testing |

|---|---|

| Tests how well the software performs under normal or expected conditions | Tests how well the software performs under extreme or abnormal conditions |

| Aims to optimize the software’s response time, speed, and scalability | Aims to identify the software’s breaking point or failure threshold |

| Examples: Load testing, volume testing, endurance testing | Examples: Spike testing, soak testing |

38. What is static testing, and how is it different from dynamic testing?

Static testing is a software testing technique that does not involve running the software but instead involves reviewing the code, documentation, and requirements. It helps identify defects early in the development process. Dynamic testing, on the other hand, involves executing the software to find defects.

39. What is the difference between positive and negative testing?

Positive testing checks whether the software behaves as expected when given valid inputs, while negative testing checks whether the software handles invalid or unexpected inputs correctly.
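
As a short illustration, assume a hypothetical `parse_age` function that accepts ages from 0 to 150. The positive test feeds it a valid input; the negative tests feed it inputs it should refuse.

```python
def parse_age(text):
    """Hypothetical function under test: parse an age between 0 and 150."""
    age = int(text)  # raises ValueError for non-numeric or empty text
    if not 0 <= age <= 150:
        raise ValueError(f"age out of range: {age}")
    return age


def rejects(text):
    """Return True if parse_age refuses the input with ValueError."""
    try:
        parse_age(text)
    except ValueError:
        return True
    return False


# Positive test: valid input produces the expected value.
positive_ok = parse_age("42") == 42

# Negative tests: each invalid input must be rejected.
negative_ok = all(rejects(bad) for bad in ["abc", "-1", "151", ""])
```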

40. What is end-to-end testing, and how is it different from integration testing?

End-to-end testing is a type of software testing that exercises complete workflows through the entire system from start to finish, including external dependencies such as databases and third-party services, to confirm that the system meets user requirements. Integration testing, on the other hand, focuses on whether specific modules or components of the software interact correctly with each other.

41. In software testing, what is the difference between manual testing and automated testing?

| Manual testing | Automated testing |

|---|---|

| Tests are performed manually by a tester | Tests are performed using automated testing tools and scripts |

| Tests are typically slower, more time-consuming, and prone to human error | Tests are typically faster, more efficient, and less prone to human error |

| Usually performed for exploratory or usability testing | Usually performed for regression or performance testing |

| Examples: Exploratory testing, usability testing, ad-hoc testing | Examples: Unit testing, regression testing, performance testing |

42. What is the difference between validation and verification in software testing?

Validation is the process of ensuring that the software meets the user’s requirements and expectations. Verification is the process of ensuring that the software meets its specified requirements and works as expected.

43. What is a test suite, and how is it used?

A test suite is a collection of test cases that are designed to test specific functionality or features of the software. It is used to automate testing and to ensure that all the tests are executed consistently and thoroughly.
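
In Python, the standard `unittest` module models this directly. The sketch below groups two test cases for a hypothetical `add` function into a suite and runs them together.

```python
import unittest


def add(a, b):
    """Hypothetical function under test."""
    return a + b


class AddTests(unittest.TestCase):
    def test_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)


def build_suite():
    """Collect individual test cases into one suite."""
    suite = unittest.TestSuite()
    suite.addTest(AddTests("test_positive_numbers"))
    suite.addTest(AddTests("test_negative_numbers"))
    return suite


def run_suite():
    """Run the suite and return the collected result object."""
    result = unittest.TestResult()
    build_suite().run(result)
    return result
```

After `run_suite()`, the result object reports how many tests ran (`testsRun`) and whether all of them passed (`wasSuccessful()`).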

44. Can you explain the difference between black-box testing and white-box testing?

Black-box testing is a testing technique where the tester focuses on the external behavior of the software, without having knowledge of its internal workings. White-box testing, on the other hand, involves testing the software with knowledge of its internal code and structure.

45. What is the difference between positive testing and negative testing?

Positive testing is a testing technique where the tester validates that the software behaves correctly when given valid inputs or actions. Negative testing, on the other hand, involves testing the software with invalid inputs or actions to validate that it handles errors correctly.

46. What sets apart acceptance testing from system testing in software testing?

| Acceptance testing | System testing |

|---|---|

| Tests whether the software meets the user’s requirements and expectations | Tests whether the software meets the system requirements and specifications |

| Typically performed by the end-users or stakeholders | Typically performed by the testing team |

| Usually the final stage of testing before the release | Performed after integration testing and before acceptance testing |

| Examples: User acceptance testing (UAT), alpha testing, beta testing | Examples: Integration testing, regression testing, performance testing |

47. Can you explain the concept of equivalence partitioning?

Equivalence partitioning is a technique where the input data is divided into groups or partitions based on the expected behavior of the software. This helps the tester to identify the minimum number of test cases needed to cover all possible scenarios.
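
For example, assume a hypothetical ticket pricing rule with three valid age groups and one invalid group. Every value inside a group should behave the same way, so one representative per partition is enough.

```python
def ticket_price(age):
    """Hypothetical pricing rule with three valid partitions."""
    if age < 0:
        raise ValueError("age cannot be negative")
    if age < 13:
        return 5    # child
    if age < 65:
        return 10   # adult
    return 7        # senior


# One representative input per valid partition: (input, expected price).
REPRESENTATIVES = {
    "child": (8, 5),
    "adult": (30, 10),
    "senior": (70, 7),
}


def check_partitions():
    """Return, per partition, whether its representative gave the expected price."""
    return {name: ticket_price(age) == price
            for name, (age, price) in REPRESENTATIVES.items()}
```

The invalid partition (negative ages) gets its own representative, for example `-5`, which should raise `ValueError`.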

48. Can you explain the concept of boundary value analysis?

Boundary value analysis is a technique where the tester focuses on the boundaries or limits of input values, as they are likely to be the source of errors. By testing inputs at the minimum, maximum, and just beyond these boundaries, the tester can identify defects related to boundary conditions.

49. What is a defect, and how do you report one?

A defect is an error or flaw in the software that causes it to behave unexpectedly or incorrectly. Defects can be reported through a defect tracking system, which provides a mechanism for recording, prioritizing, and resolving defects.

50. Can you explain the difference between a defect and a bug?

A bug is another term for a defect, and the two terms are often used interchangeably.

51. How do you triage defects, and what factors do you consider?

To triage defects means to prioritize them based on their severity, impact, and likelihood of occurrence. Factors that are considered include the severity of the defect, the affected functionality, the number of users affected, and the frequency of occurrence.

52. Can you explain the difference between verification and validation?

Verification is the process of ensuring that the software meets the specified requirements and design, while validation is the process of ensuring that the software meets the needs of the users and the business.

53. What are the differences between exploratory testing and scripted testing in software testing?

| Exploratory testing | Scripted testing |

|---|---|

| Tests are performed without a predefined test plan or script | Tests are performed using a predefined test plan or script |

| Tester has more freedom to explore the software and try different scenarios | Tester follows a predetermined set of steps and tests |

| Typically performed during the early stages of testing or for ad-hoc testing | Typically performed during regression testing or for compliance testing |

| Results in more diverse and creative testing | Results in more consistent and repeatable testing |

| Examples: Ad-hoc testing, usability testing, compatibility testing | Examples: Regression testing |

54. Can you explain the difference between static testing and dynamic testing?

Static testing involves reviewing the software documentation, design, and code without executing it, while dynamic testing involves executing the software and observing its behavior.

55. What is load testing, and how is it performed?

Load testing is a type of performance testing that measures how the software behaves under expected and peak load, to identify performance bottlenecks or defects. It is performed by simulating a large number of concurrent users or requests and monitoring the software’s response time and resource utilization.

56. What is stress testing, and how is it performed?

Stress testing is a type of testing that is used to evaluate the stability and reliability of a software application under extreme conditions such as high load, high traffic, or heavy usage. It is performed by simulating high volumes of user traffic or data to identify the breaking point or maximum capacity of the application. The objective of stress testing is to identify any performance issues, memory leaks, or system failures that may occur under high-load conditions.

57. Can you explain the concept of concurrency testing?

Concurrency testing is a type of testing that is used to evaluate the performance and behavior of a software application under multiple concurrent users or transactions. It involves simulating multiple users or transactions to test the system’s ability to handle concurrent requests and identify any performance bottlenecks or synchronization issues. The objective of concurrency testing is to ensure that the application can handle multiple users or transactions without any performance degradation or data corruption.
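
A very small version of this idea, assuming a hypothetical shared `Counter` that is protected by a lock: several threads update it at once, and the test checks that no update was lost.

```python
import threading


class Counter:
    """Hypothetical shared resource; the lock guards each update."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:
            self.value += 1


def hammer(counter, threads=8, increments=1000):
    """Increment the counter from several threads and return the final value."""
    def worker():
        for _ in range(increments):
            counter.increment()

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for thread in pool:
        thread.start()
    for thread in pool:
        thread.join()
    return counter.value
```

With 8 threads and 1000 increments each, the expected final value is 8000. A real concurrency test would target the application itself, usually through a load or test tool, but the principle of comparing the result against what sequential execution would produce is the same.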

58. What is the distinction between load testing and volume testing in software testing?

| Load testing | Volume testing |

|---|---|

| Tests how well the software performs under normal or expected loads | Tests how well the software handles large amounts of data |

| Aims to optimize the software’s response time, speed, and scalability | Aims to identify performance and memory usage issues |

| Examples: Stress testing, endurance testing | Examples: Big data testing, database testing |

59. What is acceptance testing, and when is it performed?

Acceptance testing is a type of testing that is performed to ensure that a software application meets the business requirements and satisfies the needs of the end-users. It is typically performed at the end of the software development life cycle and involves validating the software against the acceptance criteria. The objective of acceptance testing is to gain confidence that the software is ready for deployment and meets the expectations of the stakeholders.

60. What is usability testing, and when is it performed?

Usability testing is a type of testing that is used to evaluate the ease of use, efficiency, and effectiveness of a software application from a user’s perspective. It involves testing the application with a group of end-users and observing their interactions and feedback. The objective of usability testing is to identify any usability issues or areas of improvement that may impact the user experience.

61. What is performance testing, and when is it performed?

Performance testing is a type of testing that is used to evaluate the responsiveness, speed, scalability, and stability of a software application under different load conditions. It involves simulating real-world scenarios and measuring the application’s response time, throughput, and resource utilization. The objective of performance testing is to identify any performance issues or bottlenecks that may impact the application’s performance.

62. How does compatibility testing differ from integration testing in software testing?

| Compatibility testing | Integration testing |

|---|---|

| Tests whether the software can run on different hardware, software, and environments | Tests how well the different modules or components of the software work together |

| Ensures the software can operate across different platforms, browsers, and devices | Ensures the software is integrated and functions as a whole |

| Examples: Browser compatibility testing, hardware compatibility testing | Examples: API testing, unit testing, system testing |

63. Can you explain the concept of test coverage?

Test coverage is a measure of the percentage of code or functionality that is covered by the test cases. It is used to determine the effectiveness of the testing and to identify any areas of the software that have not been tested. Test coverage is typically measured by using code coverage tools or test management tools.

64. What is a code review, and how is it performed?

A code review is a process of reviewing the source code to identify any coding errors, defects, or quality issues. It is typically performed by a team of developers or software engineers and involves reviewing the code for readability, maintainability, and adherence to coding standards. Code reviews can be performed manually or using code review tools.

65. What is code coverage, and how is it measured?

Code coverage is a measure of the percentage of code that has been executed by the test cases. It is used to determine the effectiveness of the testing and to identify any areas of the software that have not been tested. Code coverage can be measured using code coverage tools that analyze the code and report on the percentage of code covered by the test cases.
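
For line coverage, the calculation is the number of executable lines the tests ran divided by the total number of executable lines. The sketch below shows only that arithmetic; the line numbers are made up, and real coverage tools gather them by instrumenting the program while the tests run.

```python
def line_coverage(executed_lines, executable_lines):
    """Return line coverage as a percentage of executable lines."""
    if not executable_lines:
        raise ValueError("no executable lines to measure")
    covered = executed_lines & executable_lines
    return 100.0 * len(covered) / len(executable_lines)


# Hypothetical module with 8 executable lines, 6 of which the tests ran.
executable = set(range(1, 9))
executed = {1, 2, 3, 4, 5, 6}
percent = line_coverage(executed, executable)  # 6 / 8 = 75.0
```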

65. Can you explain the difference between verification and validation?

Verification is the process of evaluating the software to ensure that it meets the specified requirements and conforms to the design and development standards. Validation, on the other hand, is the process of evaluating the software to ensure that it meets the needs of the end-users and satisfies the business requirements.

66. Can you explain the difference between quality assurance and quality control?

Quality assurance is the process of ensuring that the software development process is effective and efficient and produces high-quality software. It involves defining and implementing quality standards, processes, and procedures to prevent defects and ensure that the software meets the specified requirements. Quality control, on the other hand, is the process of evaluating the software to ensure that it meets the quality standards and conforms to the specified requirements. It involves identifying and correcting defects and ensuring that the software meets the desired level of quality.

67. What are the key differences between usability testing and accessibility testing in software testing?

| Usability testing | Accessibility testing |

|---|---|

| Tests whether the software is easy to use, navigate, and understand for the user | Tests whether the software is accessible and usable for people with disabilities |

| Aims to improve user experience and satisfaction | Aims to improve the software’s compliance with accessibility standards |

| Examples: User testing, A/B testing, focus group testing | Examples: Screen reader testing, color contrast testing, keyboard-only testing |

68. What is the difference between a defect and a failure?

A defect is a flaw or imperfection in the software code or design that can cause the software to behave unexpectedly or produce incorrect results. A failure, on the other hand, is the actual occurrence of the defect when the software is executed. In other words, a defect is a potential problem in the software, while a failure is an actual problem that has occurred.
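
A tiny example makes the distinction concrete. The hypothetical `average` function below contains a defect (it does not handle an empty list), but that defect only turns into a failure when the function is actually called with an empty list.

```python
def average(values):
    # Defect: an empty list is not handled, so the division can fail.
    return sum(values) / len(values)


def observe_failure(values):
    """Return True if calling average() with these values fails."""
    try:
        average(values)
    except ZeroDivisionError:
        return True
    return False


# The defect is present but dormant: no failure occurs.
dormant = average([2, 4]) == 3.0

# The same defect now causes a failure.
failed = observe_failure([])
```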

69. Can you explain the concept of test automation?

Test automation is the process of using software tools to automate manual testing processes, such as test case creation, test execution, and result analysis. It involves using automation tools to simulate user interactions, generate test data, and compare actual results with expected results. Test automation is typically used to increase testing efficiency, reduce testing time, and improve testing accuracy. However, not all testing can be automated, and manual testing is still required in many cases.

70. What is a defect life cycle, and how does it work?

The defect life cycle is a series of stages that a defect or bug goes through from discovery to resolution. The typical stages include:

- New: The defect is identified and logged in a tracking system.

- Open: The defect is reviewed by the development team to confirm it is valid.

- Assigned: The defect is assigned to a developer for investigation and resolution.

- In progress: The developer is actively working on fixing the defect.

- Fixed: The defect has been fixed by the developer and is ready for testing.

- Retest: The fixed defect is tested to confirm that it has been resolved.

- Closed: The defect is confirmed as resolved and closed in the tracking system.
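
The stages above can be sketched as a small state machine. This is only an illustration: the `Defect` class is hypothetical, and the path from Retest back to Assigned (for a fix that fails retesting) is an assumption that is not part of the list above.

```python
# Allowed transitions between the stages of the defect life cycle.
TRANSITIONS = {
    "New": {"Open"},
    "Open": {"Assigned"},
    "Assigned": {"In progress"},
    "In progress": {"Fixed"},
    "Fixed": {"Retest"},
    # Assumption: a failed retest sends the defect back to a developer.
    "Retest": {"Closed", "Assigned"},
    "Closed": set(),
}


class Defect:
    def __init__(self, title):
        self.title = title
        self.status = "New"
        self.history = ["New"]

    def move_to(self, new_status):
        # Reject any transition that the life cycle does not allow.
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status} to {new_status}")
        self.status = new_status
        self.history.append(new_status)


bug = Defect("Login button unresponsive")
for stage in ["Open", "Assigned", "In progress", "Fixed", "Retest", "Closed"]:
    bug.move_to(stage)
print(bug.history)
```

Real tracking tools typically add more states, such as Rejected, Deferred, or Reopened, and let each team configure its own workflow.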

71. Can you explain the concept of a test environment?

A test environment is a dedicated setup of hardware, software, and network configurations used to conduct testing of a software application or system. It can include servers, databases, operating systems, virtual machines, and other components necessary for testing. The test environment is designed to replicate the production environment as closely as possible to ensure that the application or system performs as expected in the real world.

72. Can you explain the concept of a test plan?

A test plan is a document that outlines the approach, objectives, scope, and resources required for testing a software application or system. It includes details such as test strategies, test scenarios, test cases, test schedules, and resources allocated to testing. A test plan serves as a roadmap for the testing effort and ensures that testing is conducted thoroughly and effectively.

73. What is a defect tracking system, and how does it work?

A defect tracking system is a software tool that manages and tracks defects or bugs identified during the testing of a software application or system. It enables testers and developers to log, prioritize, assign, and track the status of defects as they progress through the defect life cycle. The tracking system typically includes features for reporting, searching, and analyzing defect data to identify trends, common issues, and areas for improvement.

74. How do you handle a situation where a defect is not reproducible?

If a defect cannot be reproduced, it may be due to a variety of factors such as an intermittent issue, a specific environment or data set, or a configuration issue. To handle such a situation, the tester should:

- Gather as much information about the defect as possible, including steps to reproduce, the system configuration, and any error messages or logs.

- Try to replicate the defect on different machines or environments.

- Consult with the development team to investigate possible causes and solutions.

- Consider whether the defect is critical enough to delay the release or whether it can be deferred to a future release.

75. What is the difference between smoke testing and sanity testing?

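Smoke testing is a type of testing that verifies that the critical or most important features of an application or system are working as expected. It is usually performed after a build or release to quickly identify any major issues that could prevent further testing or deployment. Sanity testing, by contrast, is a narrow, focused check performed after a minor change or bug fix to confirm that the affected functionality works as intended before more thorough testing begins. In short, smoke testing is broad and shallow across the whole build, while sanity testing is narrow and targeted at a specific area.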

76. What is a test oracle, and how is it used?

A test oracle is a mechanism or a set of procedures used to determine whether the output of a software program is correct. It’s typically used to compare the expected result of a program with its actual output. The oracle can be manual or automated, and it can be based on formal specifications, domain knowledge, or other sources of information.
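
One common kind of automated oracle is a slow but obviously correct reference implementation. The sketch below is an illustration only; both functions are hypothetical and were written for this example.

```python
def unique_sorted(items):
    """Hypothetical implementation under test."""
    return sorted(set(items))


def oracle(items):
    """Slow, simple reference that serves as the test oracle."""
    result = []
    for item in items:
        if item not in result:
            result.append(item)
    result.sort()
    return result


def agrees_with_oracle(items):
    # The oracle decides whether the actual output is correct.
    return unique_sorted(items) == oracle(items)


for data in ([], [3, 1, 2], [5, 5, 5], [2, 9, 2, 1, 9]):
    print(data, agrees_with_oracle(data))
```

The oracle does not need to be fast or elegant. It only needs to be trustworthy enough to judge the output of the code under test.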

77. Can you explain the concept of negative testing?

Negative testing is a software testing technique in which the system or application is tested with invalid or unexpected inputs or conditions. The purpose of negative testing is to ensure that the system can handle error conditions gracefully, and does not crash or behave unpredictably.
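
A minimal sketch of negative testing might look like the following. The `parse_age` function and its rules (whole numbers from 0 to 130) are assumptions chosen for illustration. Each invalid input should be rejected with a clear error rather than crashing or being silently accepted.

```python
def parse_age(text):
    """Hypothetical parser: accepts whole numbers from 0 to 130 as text."""
    if not isinstance(text, str) or not text.strip().isdigit():
        raise ValueError(f"not a whole number: {text!r}")
    age = int(text.strip())
    if age > 130:
        raise ValueError(f"age out of range: {age}")
    return age


def is_rejected(value):
    """Return True if parse_age refuses the input with a ValueError."""
    try:
        parse_age(value)
    except ValueError:
        return True
    return False


# Invalid or unexpected inputs: empty, non-numeric, negative,
# fractional, out of range, and the wrong type entirely.
invalid_inputs = ["", "abc", "-5", "12.5", "999", None]
for value in invalid_inputs:
    print(repr(value), "rejected" if is_rejected(value) else "ACCEPTED")
```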

78. How do you handle a situation where the software behaves differently on different platforms?

To handle a situation where the software behaves differently on different platforms, you can perform cross-platform testing to identify the root cause of the problem. This involves testing the software on different operating systems, hardware, and network configurations to determine which platform(s) are causing the issue. Once you identify the problem platform(s), you can investigate further to find a solution.

79. Can you explain the concept of test-driven development?

Test-driven development (TDD) is a software development approach in which tests are written before the code is implemented. The idea is to write a failing test case that describes a desired behavior or feature, and then write the minimum amount of code needed to make the test pass. This process is repeated iteratively, with additional tests being added as needed to ensure that the code works as expected.
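
The cycle can be sketched in a few lines of Python. The `slugify` function is a hypothetical feature invented for this example. The test is written first, and the implementation underneath it is the smallest code that makes the test pass.

```python
# Step 1: write the test first. At this point slugify does not exist yet,
# so running the test would fail.
def test_slugify():
    assert slugify("Hello World") == "hello-world"
    assert slugify("  Trim me  ") == "trim-me"


# Step 2: write the minimum code needed to make the test pass.
def slugify(title):
    return "-".join(title.lower().split())


# Step 3: run the test again; it now passes, and the next test can be added.
test_slugify()
print("test_slugify passed")
```

Each new requirement, such as stripping punctuation, would start with another failing assertion before any implementation change.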

80. How do you ensure that your test cases are complete?

To ensure that your test cases are complete, you can use techniques such as boundary value analysis, equivalence partitioning, and decision table testing to identify all possible test scenarios. You can also use code coverage analysis tools to ensure that all parts of the code are tested. Additionally, you can involve stakeholders such as developers, testers, and users in the testing process to identify additional test cases.
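
As an example of one of these techniques, the sketch below applies boundary value analysis to a hypothetical rule that an order may contain 1 to 100 items. The function names and the rule itself are assumptions made for illustration.

```python
def is_valid_quantity(quantity):
    """Hypothetical rule: an order may contain 1 to 100 items."""
    return 1 <= quantity <= 100


def boundary_values(low, high):
    """Values just below, on, and just above each edge of the valid range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


for value in boundary_values(1, 100):
    print(value, is_valid_quantity(value))
```

Six test values cover both edges of the range, which is where off-by-one mistakes tend to appear.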

81. Can you explain the difference between performance testing and load testing?

Performance testing is a type of testing that evaluates how well a system performs under different workloads and conditions, such as high user traffic, large data sets, or peak usage periods. Load testing is a subset of performance testing that focuses specifically on measuring the system’s behavior under an expected or peak volume of users or transactions. The goal of load testing is to confirm that the system can handle that volume and to identify potential bottlenecks before they affect real users.

82. How do you handle a situation where the software is not behaving as expected?

To handle a situation where the software is not behaving as expected, you can perform debugging and troubleshooting to identify the root cause of the problem. This involves analyzing the code, logs, and other system data to determine where the problem is occurring and what is causing it. Once you identify the root cause, you can develop a fix and test it to ensure that it resolves the issue.

83. Can you explain the concept of continuous testing?

Continuous testing is a software testing approach that involves testing throughout the development process, from the initial design phase to the final release. The goal of continuous testing is to identify and fix issues early in the development cycle, rather than waiting until the end of the cycle to perform testing. This involves using automated testing tools and techniques, such as unit testing, integration testing, and regression testing, to ensure that the code is functioning as expected at every stage of the development process.

84. Can you explain the concept of code coverage analysis?

Code coverage analysis is a technique used to measure the degree to which source code is executed during testing. It involves analyzing the code to determine which statements, branches, or paths are covered by the test cases and which are not. This information is used to identify areas of the code that are not being tested adequately and to improve the quality of the testing effort.
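
The idea can be illustrated with a small line tracer built on Python's `sys.settrace`. This is a simplified sketch, not a replacement for a dedicated tool such as coverage.py, and the `classify` function is a hypothetical example. Line numbers are reported relative to the `def` line of the function.

```python
import sys


def classify(n):
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    return "positive"


def lines_executed(func, *args):
    """Return the line offsets inside func that run during one call."""
    executed = set()
    code = func.__code__

    def tracer(frame, event, arg):
        # Only record line events that belong to the function under test.
        if event == "line" and frame.f_code is code:
            executed.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return executed


# Two test inputs leave the "zero" branch (offset 4) unexecuted.
covered = lines_executed(classify, 5) | lines_executed(classify, -1)
print(sorted(covered))

# Adding a third input exercises the missing branch.
covered |= lines_executed(classify, 0)
print(sorted(covered))
```

The gap between the two printed sets is exactly the kind of information a coverage report provides: which code the tests never reached.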

85. How do you measure the performance of an automated test suite?

There are several metrics that can be used to measure the performance of an automated test suite, including:

- Test execution time: The time it takes to execute the entire test suite.

- Test coverage: The percentage of the application or system that is covered by the test suite.

- Test pass rate: The percentage of tests that pass without error.

- Test failure rate: The percentage of tests that fail due to defects or errors.

- Test maintenance effort: The time and effort required to maintain the test suite.
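
Several of these metrics can be computed directly from the raw results of a test run. The sketch below assumes a hypothetical list of result records; the field names and values are made up for illustration.

```python
# Hypothetical results from one run of an automated suite.
results = [
    {"name": "test_login", "passed": True, "seconds": 1.5},
    {"name": "test_logout", "passed": True, "seconds": 0.5},
    {"name": "test_checkout", "passed": False, "seconds": 2.0},
    {"name": "test_search", "passed": True, "seconds": 1.0},
]


def summarize(results):
    """Compute execution time, pass rate, and failure rate for one run."""
    total = len(results)
    passed = sum(1 for r in results if r["passed"])
    return {
        "execution_time": sum(r["seconds"] for r in results),
        "pass_rate": 100 * passed / total,
        "failure_rate": 100 * (total - passed) / total,
    }


summary = summarize(results)
print(summary)
```

Test coverage and maintenance effort need other data sources, such as a coverage tool or time tracking, so they are left out of this sketch.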

86. What is the difference between a test scenario and a test case?

- A test scenario is a high-level description of a testing activity that outlines the objectives, assumptions, and expected results of the test. It typically describes a business process or a user interaction and is used to identify the scope of testing and to guide the creation of test cases.

- A test case, on the other hand, is a detailed description of a specific test that includes inputs, actions, and expected outputs. It provides step-by-step instructions for executing the test and verifying the results.

87. What is the difference between a use case and a test case?

A use case is a description of a business process or a user interaction that describes how the system should behave in a specific scenario. It is used to capture requirements and to guide the development of the system. A test case, as mentioned earlier, is a detailed description of a specific test that includes inputs, actions, and expected outputs. It is used to verify that the system behaves as intended and meets the requirements specified in the use cases.

88. Can you explain the concept of API testing?

API testing is a type of testing that focuses on testing the application programming interfaces (APIs) of a software application or system. It involves testing the inputs and outputs of the API to ensure that it behaves as expected and meets the functional and non-functional requirements. API testing can be done manually or through automation tools and can include testing for performance, security, and reliability.

89. Can you explain the concept of pair-wise testing?

Pair-wise testing is a software testing technique in which test cases are chosen so that every possible pair of values for every two input parameters appears together in at least one test. Because most defects are triggered by a single input or by the interaction of two inputs, this approach greatly reduces the number of test cases compared with testing every combination while still ensuring adequate test coverage. It is particularly useful when testing complex systems with many input variables, as it can help to identify interactions between inputs that may not be evident when testing individual inputs in isolation.
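
The following is a minimal sketch of how a pair-wise suite could be generated with a simple greedy strategy. The parameter names and values are hypothetical, and the greedy search over all combinations is only practical for small inputs; dedicated tools use more efficient algorithms.

```python
from itertools import combinations, product


def all_pairs(parameters):
    """Every pair of (parameter, value) settings that must be covered."""
    pairs = set()
    for a, b in combinations(list(parameters), 2):
        for va, vb in product(parameters[a], parameters[b]):
            pairs.add(((a, va), (b, vb)))
    return pairs


def pairs_in(test, names):
    """The value pairs that one concrete test case covers."""
    return {((a, test[a]), (b, test[b])) for a, b in combinations(names, 2)}


def pairwise_suite(parameters):
    names = list(parameters)
    remaining = all_pairs(parameters)
    candidates = [dict(zip(names, values))
                  for values in product(*parameters.values())]
    suite = []
    while remaining:
        # Greedy step: pick the candidate covering the most uncovered pairs.
        best = max(candidates,
                   key=lambda t: len(pairs_in(t, names) & remaining))
        suite.append(best)
        remaining -= pairs_in(best, names)
    return suite


parameters = {
    "browser": ["chrome", "firefox", "safari"],
    "os": ["windows", "macos", "linux"],
    "locale": ["en", "de"],
}
suite = pairwise_suite(parameters)
print(len(suite), "tests instead of", 3 * 3 * 2)
for test in suite:
    print(test)
```

Every pair of values still appears in at least one test, but the suite is noticeably smaller than the full set of 18 combinations.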

90. What is the difference between a stress test and a load test?

A stress test is a type of testing that evaluates how well a system can handle high stress or extreme conditions, such as high user traffic or excessive data input. The goal of stress testing is to identify the system’s breaking point or the point at which it no longer functions correctly. Load testing, on the other hand, is a type of testing that evaluates how well a system can handle normal or expected levels of user traffic or data input.

91. What is the concept of acceptance criteria?

Acceptance criteria refer to a set of conditions that a product or feature must meet to be considered acceptable or satisfactory by a stakeholder or customer.

92. What is the difference between ad-hoc testing and exploratory testing?

Ad-hoc testing involves testing without a pre-planned test strategy or plan, while exploratory testing involves testing with the goal of discovering new information and gaining a deeper understanding of the system. The main difference is that ad-hoc testing tends to be more random and less focused, while exploratory testing is more deliberate and structured.

93. How do you handle a situation where a test case is failing intermittently?

To handle an intermittent test failure, it’s important to gather as much information as possible about the failure, including any error messages, logs, or other relevant data. If possible, try to reproduce the failure and gather additional information. It may also be helpful to collaborate with other team members, such as developers or other testers, to identify the root cause of the failure and develop a plan to address it.
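
One practical way to gather that information is to rerun the test many times and record when and how it fails. The sketch below uses a hypothetical stand-in test that fails on every fifth call so that the example is repeatable; a real flaky test would fail less predictably.

```python
import itertools

_calls = itertools.count(1)


def flaky_test():
    """Stand-in for an intermittent test: fails on every fifth call."""
    call = next(_calls)
    if call % 5 == 0:
        raise AssertionError(f"timed out on call {call}")


def measure_flakiness(test_func, runs=20):
    """Rerun a test and collect the attempt number and message of each failure."""
    failures = []
    for attempt in range(1, runs + 1):
        try:
            test_func()
        except AssertionError as exc:
            failures.append((attempt, str(exc)))
    return len(failures) / runs, failures


rate, failures = measure_flakiness(flaky_test)
print(f"failure rate: {rate:.0%}")
for attempt, message in failures:
    print(attempt, message)
```

A measured failure rate and a list of failure messages give developers something concrete to investigate, and they make it possible to confirm later that a fix actually worked.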

94. What is the concept of model-based testing?

Model-based testing involves using models or representations of the system being tested to generate test cases and automate the testing process. This approach can be particularly useful for complex systems, as it allows for more efficient and effective testing while reducing the risk of missing critical defects.

95. What is the difference between a defect-tracking tool and a test management tool?

A defect tracking tool is used to log and track defects or issues found during the testing process, while a test management tool is used to plan, design, and execute tests. While both types of tools can be useful in managing the testing process, they serve different functions and are often used in conjunction with one another.

96. How do you ensure that your test cases are maintainable?

To ensure that test cases are maintainable, it’s important to follow good testing practices such as using clear and concise test cases, avoiding duplication, and organizing test cases in a logical and consistent manner. It can also be helpful to use automation tools to streamline the testing process and reduce the risk of errors or inconsistencies.

97. What is the concept of a traceability matrix?

A traceability matrix is a document or tool that shows the relationship between different elements of the testing process, such as requirements, test cases, and defects. This can be useful for tracking progress, identifying gaps or inconsistencies, and ensuring that all requirements are adequately tested.
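
In its simplest form, a traceability matrix is a mapping from each requirement to the test cases that cover it. The sketch below uses hypothetical requirement and test case IDs to show how such a mapping exposes gaps.

```python
# Hypothetical requirement IDs and the requirements each test case covers.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
covers = {
    "TC-1": ["REQ-1"],
    "TC-2": ["REQ-1", "REQ-2"],
}


def build_matrix(requirements, covers):
    """Map every requirement to the list of test cases that cover it."""
    matrix = {req: [] for req in requirements}
    for test_id, covered in covers.items():
        for req in covered:
            matrix.setdefault(req, []).append(test_id)
    return matrix


matrix = build_matrix(requirements, covers)
untested = [req for req, tests in matrix.items() if not tests]

for req, tests in matrix.items():
    print(req, tests)
print("untested:", untested)
```

Here REQ-3 has no test cases, which is the kind of gap a traceability matrix is meant to reveal.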

98. What is the difference between a test result and a test report?

A test result refers to the outcome of a specific test case or set of test cases, while a test report provides a more comprehensive overview of the testing process and results. A test report may include information such as the testing approach used, the number of tests executed, the number and severity of defects found, and recommendations for improvement.

99. What is the concept of equivalence class testing?

Equivalence class testing is a testing technique that involves dividing the input data into different groups or classes based on certain criteria, such as range, type, or behavior. By testing a representative sample from each class, testers can identify defects that may affect multiple inputs within the same class.
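
As an illustration, the sketch below partitions ages into three classes for a hypothetical ticket pricing rule and tests one representative value from each class. The prices and age ranges are assumptions made up for this example.

```python
def ticket_price(age):
    """Hypothetical pricing rule used as the system under test."""
    if age < 0:
        raise ValueError("age cannot be negative")
    if age <= 12:
        return 5
    if age <= 64:
        return 10
    return 7


# Each class: (name, values that should behave the same, expected price).
CLASSES = [
    ("child", range(0, 13), 5),
    ("adult", range(13, 65), 10),
    ("senior", range(65, 121), 7),
]


def representative(values):
    """Pick one value from the middle of the class."""
    return values[len(values) // 2]


for name, values, expected in CLASSES:
    sample = representative(values)
    assert ticket_price(sample) == expected, name
    print(name, sample, expected)
```

Three tests stand in for more than a hundred possible ages. Combining this with boundary values at the edges of each class gives stronger coverage.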

100. How do you handle a situation where there is not enough time to test everything?

When there is not enough time to test everything, it’s important to prioritize testing based on the most critical or high-risk areas of the system. This may involve working closely with stakeholders to identify the most important features or functions, as well as collaborating with developers to identify areas of the system that are most likely to contain defects. It may also be helpful to use automation tools to streamline repetitive testing.
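
One way to make this prioritization explicit is to score each test area and fill the available time with the highest-scoring ones first. The sketch below is an assumption-laden illustration: the scoring formula (likelihood of defects multiplied by business impact), the 1 to 5 scales, and all the sample data are hypothetical.

```python
# Hypothetical test areas with 1-5 ratings and estimated effort in minutes.
areas = [
    {"name": "profile_page", "likelihood": 2, "impact": 2, "minutes": 15},
    {"name": "checkout", "likelihood": 4, "impact": 5, "minutes": 30},
    {"name": "search", "likelihood": 3, "impact": 3, "minutes": 25},
    {"name": "login", "likelihood": 3, "impact": 5, "minutes": 20},
]


def plan(areas, budget_minutes):
    """Select the highest-risk areas that fit into the time budget."""
    # Assumption: risk score = likelihood of defects x business impact.
    ranked = sorted(areas,
                    key=lambda a: a["likelihood"] * a["impact"],
                    reverse=True)
    selected, used = [], 0
    for area in ranked:
        if used + area["minutes"] <= budget_minutes:
            selected.append(area["name"])
            used += area["minutes"]
    return selected


selected = plan(areas, budget_minutes=60)
print(selected)
```

The ratings themselves should come from stakeholders and developers, as described above. The code only makes the resulting trade-off visible.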

If you want to increase your chances of excelling in your upcoming software testing job interview, it’s important to be well-equipped with the Top 100 software testing interview questions and answers. You can stay up-to-date with the latest information and expand your knowledge by following us at freshersnow.com.